"Science Vs" Cited Seven Studies To Argue There’s No Controversy About Giving Puberty Blockers And Hormones To Trans Youth. Let’s Read Them.

The show is strikingly selective in its skepticism

I was only able to do this work, which is very resource-intensive, because of my paying subscribers. If you find this article at all useful, please consider becoming one:

Science Vs, a leading science journalism podcast produced by Gimlet, is dedicated to cutting through misinformation and politicization and delivering its listeners the truth. The show “takes on fads, trends, and the opinionated mob to find out what’s fact, what’s not, and what’s somewhere in between.”

In March, it published an episode called “Trans Kids: The Misinformation Battle” that seriously misled listeners about the quality of the evidence for youth gender medicine — that is, puberty blockers and cross-sex hormones. Worse, the show engaged in some rather irresponsible fearmongering on the subject.

The exchange in question came during a conversation between host Wendy Zukerman and psychiatrist Jack Turban, who is one of the most commonly quoted and enthusiastic advocates for youth gender medicine. It originally went like this, according to the show’s transcript, with the discussion between Zukerman and Turban set in italics and points where Zukerman is addressing listeners directly set in plain text:

[Zukerman:] So overall, hormones have some risks, and they're not easily reversible – but the top dogs in this space, they’re all on board with this – not only hormones but puberty blockers too.

[Turban:] American Medical Association[115], the American Academy of Pediatrics[116], the American Psychiatric Association[117], the American Academy of Child and Adolescent Psychiatry[118], I could go on and on

[Zukerman:] Not controversial at all?

[Turban:] No

[Zukerman:] And the reason that it's not controversial is because – again – we need to look at what happens if you do nothing. Like you don’t allow your kid to go on hormones. And just last month - a study from Seattle was published looking at just this. It had followed about 100 young adults, and compared those who got this gender affirming care - to trans folks who didn’t.[119] And they found that while those who got this treatment ultimately felt better afterwards,[120] those who didn’t felt worse and worse.[121] And by the end of the study, those who got gender affirming care were 73% less likely to have thoughts of killing themselves or hurting themselves.[122] Other research suggests the same thing.[123][124][125][126][127][128]

Let’s linger on this for a moment. These treatments are utterly uncontroversial, Zukerman said, because of “what happens if you do nothing. Like you don’t allow your kid to go on hormones.” Following that was a summary of a recent study that, Zukerman claimed, found that access to gender-affirming medicine (henceforth GAM) led to reduced suicidality among young adults.¹

Zukerman is clearly saying that if you, the parent listening, have a kid who wants to go on hormones, and you don’t put them on hormones, you risk raising the probability they will become suicidal and/or attempt suicide. This is a profoundly serious claim — an invocation of every parent’s worst nightmare — so one would hope that it’s backed by nothing but ironclad evidence.

But that isn’t the case. The study Zukerman referenced, which was published in JAMA Network Open by PhD student Diana Tordoff and her colleagues in February, didn’t come close to finding what its authors claimed. I explained its many crippling flaws here in April. The post is long, but if you want to understand how aggravating it is that mainstream science outlets are treating this research so credulously, you should read it. The short version is that, in a sample of kids at a gender clinic, those who went on GAM didn’t appear to experience any statistically significant improvement on any mental health measure (here’s a primer on what “statistically significant” means; it is going to come up a lot in this article). So Zukerman’s claim that “those who got this treatment ultimately felt better afterwards” was completely false, directly contradicted by the paper’s supplementary material.

In early May, Robert Guttentag, a UNC–Greensboro psychologist, bcc’ed me on a thoughtful email he sent to the show’s producers highlighting what he viewed as flaws with the episode. I forwarded the email to the show to make sure the producers saw it, and argued that they had badly misrepresented the Tordoff study. To the show’s credit, later in the month I got an email from the “Science Vs Team” in which they acknowledged they’d made an error: “We now realize we had misread the paper — which had found a difference between the treatment group and the control group, not a significant change before and after the treatment. We have adjusted the study description in our episode[.]”

Unfortunately, they also said they stood by the idea that this is a quality study that adds to the evidence base for youth gender medicine: “Thanks for bringing this to our attention. We chose to call out this paper because, while it isn’t perfect, it had some advantages to other studies looking at this. For example, it had a control condition and followed patients prospectively rather than retrospectively. Because it’s replicating the beneficial effect of gender-affirming healthcare that several other studies have found, we feel confident about the take-away overall.”

I’m glad the show issued a partial correction, but this is still dismaying. First, it’s worrisome that the Science Vs team thinks there was a genuine “control condition” in the Tordoff study that makes it stronger than previous research on the subject. There really wasn’t. It was a 12-month study that started with 104 kids, but by the final follow-up a grand total of seven kids were left in the no-medicine group:

As I noted at the time, “Overall, according to the researchers’ data, 12/69 (17.4%) of the kids who were treated left the study, while 28/35 (80%) of the kids who weren’t treated left it.” The authors offer no explanation as to why this was the case, no explanation why some kids went on blockers or hormones and others didn’t, and little reason for us to trust that any observed differences between the groups are attributable to accessing GAM rather than any of a host of other potential confounding factors. (In the course of writing this post, I noticed that I myself called the no-medicine kids a “control” group once in my April write-up, which was a mistake, but I just fixed it.)
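For anyone who wants to check the attrition arithmetic, here is a minimal sketch in Python. It uses only the counts quoted above; the variable and function names are mine, chosen for illustration, and nothing here comes from the authors' own analysis code.

```python
# Counts as quoted in the text above (treated vs. untreated kids).
treated_start, treated_left = 69, 12
untreated_start, untreated_left = 35, 28


def dropout_rate(left: int, start: int) -> float:
    """Share of a group that left the study before the final follow-up."""
    return left / start


treated_rate = dropout_rate(treated_left, treated_start)
untreated_rate = dropout_rate(untreated_left, untreated_start)

# Kids still in the no-medicine group at the final follow-up.
untreated_remaining = untreated_start - untreated_left

print(f"Initial sample: {treated_start + untreated_start}")
print(f"Treated dropout: {treated_rate:.1%}")
print(f"Untreated dropout: {untreated_rate:.1%}")
print(f"Untreated kids remaining: {untreated_remaining}")
```

The point of laying it out this way is that the two groups lost participants at wildly different rates, which is why the seven remaining untreated kids make for such a shaky comparison.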

The headline difference between the two groups’ outcomes, which really boiled down not to the treatment group improving but to the non-treatment group supposedly getting worse, was generated by highly questionable methodological choices, as I detailed at length. When I asked a leading expert on the specific statistical technique the authors used about this paper, he professed surprise they’d employed this technique, and raised a separate, potentially significant problem with their statistical model (basically, that the model factored in data from 17 kids, roughly a sixth of the initial sample, who showed up at the clinic one time for an initial assessment and then dropped out of the study, despite the fact that their data can’t tell us anything about anything since the subjects weren’t tracked over time).

Again, see my post for all the details, but the majority of these points are fairly basic and don’t require much statistical sophistication to understand. This is an extremely weak study, and it definitely doesn’t have anything like a “control group” in the traditional sense. It isn’t a stretch to claim that it tells us nothing about the question at hand, other than that, according to the researchers’ own methods, kids who went on blockers and/or hormones in this particular clinical sample did not get less suicidal, depressed, or anxious over time. That’s a small, useful nugget of knowledge to add to our understanding of this issue, but it’s not an encouraging one for advocates of youth gender medicine.

The authors are quite opaque about their methods, and we could better understand what they found (and didn’t find) if they would share their data. That would allow other researchers to poke around, see which of their findings are robust to different methodological choices, and so on. Tordoff claimed in an email to me that her team “provided the raw data in the supplement for transparency,” but when I emailed her back to point out that no, the data weren’t available online, she stopped responding. A spokesperson for her university, the University of Washington–Seattle, subsequently confirmed to me that her team refused to share its data.

Despite all this, Science Vs continues to present this study to the public as solid recent evidence for the efficacy of puberty blockers and hormones. That’s bad and misleading science communication — irresponsible, I’d argue, given the seriousness of the subject and given Zukerman’s scary claim about the ramifications of not allowing kids to go on youth gender medicine. This study absolutely does not provide us with anything like a clean, statistically tenable comparison between kids who did and didn’t go on blockers or hormones.

Part of Science Vs’s argument for accepting the study despite its shortcomings is that it is “replicating the beneficial effect of gender-affirming healthcare that several other studies have found.” (Tordoff has claimed similarly: “Our study builds on what we have already seen from an already staggering amount of scientific research,” she told an outlet called HealthDay News. “Access to gender-affirming care saves trans youth’s [sic] lives.”) This clashed with my own understanding of the evidence base here, at least when it comes to decently rigorous research, so it made me wonder about Science Vs’s overall approach to the issue. What studies did the show’s producers read that make them so confident that there’s a clear consensus here? I decided to take a close-bordering-on-obsessive look at the research in question.

As you can likely already tell, this is going to be a long post. My goal when I go this deeply on a subject is to produce work that will be useful and durable. Hardly anyone has closely compared the claims surrounding youth gender medicine to the research itself to see whether the two match. Science Vs offers us a useful opportunity here, because it’s a show that prides itself on its accuracy and rigor, and because (as we’ll see) it takes an admirably transparent approach that makes this sort of exercise easier.

If this article is too long for a lot of potential readers, so be it — it’s here if and when you find it useful, and I don’t think this issue is going away anytime soon.

But First, A Word On The “Top Dogs”

(This section is completely skippable if you are interested only in the question of what studies Science Vs used to support its claim that youth gender medicine is uncontroversial and what those studies actually say.)

I don’t want to be accused of ignoring Zukerman’s claim that the “top dogs” are all on board with youth gender medicine. After all, if all the experts really are on the same page about this, and have done their homework, I could be accused of nitpicking if I found weaknesses with Science Vs’s specific choices of study citations. Maybe there are a bunch of other studies, cited by the major authorities in this space, that do show there’s no controversy here.

Here’s what Science Vs said about those top dogs and the citations it used to defend its claims:

[Zukerman:] So overall, hormones have some risks, and they're not easily reversible – but the top dogs in this space, they’re all on board with this – not only hormones but puberty blockers too.

[Turban:] American Medical Association[115], the American Academy of Pediatrics[116], the American Psychiatric Association[117], the American Academy of Child and Adolescent Psychiatry[118], I could go on and on

In my experience, this is a common response to anyone who expresses qualms about the evidence for youth gender medicine. And in general, it’s surely better to trust major medical and psychological organizations than to reflexively distrust them. But this particular issue is complicated and politically fraught, and I’ve found that often, if you closely examine the documents published by these organizations in support of youth gender medicine — or in opposition to attempts to ban it (I am also opposed to such bans) — they fail to adhere to basic standards of accurate science communication and rigor. Their biggest problem is citational mischief: They make claims, and then link those claims to research that doesn’t actually support them.

I’m going to do only a brief treatment here that hopefully will show why I don’t take Science Vs’s top-dogs argument seriously: All of the documents here are either irrelevant or contain plainly misleading citations.

The American Medical Association document is “Health insurance coverage for gender-affirming care of transgender patients,” an “Issue Brief” coauthored by that organization and an organization called GLMA: Health Professionals Advancing LGBTQ Equality. A key claim in it: “Recent research demonstrates that integrated affirmative models of care for youths, which include access to medications and surgeries, result in fewer mental health concerns than has been historically seen among transgender populations.” The footnote points to this study, this study, and this study. None of the three studies includes any outcome data at all. It’s very bad form for the AMA — an organization that we would hope would adhere to the highest standards of evidence — to claim X, and then point to not one but three studies that offer no statistical evidence in support of X.

The American Academy of Pediatrics document is “Ensuring Comprehensive Care and Support for Transgender and Gender-Diverse Children and Adolescents,” a “Policy Statement” published in Pediatrics. It’s written to be a general rundown of these issues for medical providers, and it barely touches on the evidence question. It does include the claim that “There is a limited but growing body of evidence that suggests that using an integrated affirmative model results in young people having fewer mental health concerns whether they ultimately identify as transgender.24,36,37” That’s a strikingly similar sentence to the AMA/GLMA one. And sure enough, those three endnotes are… the exact same three citations, in the same order, as are found in the AMA document. You know, the ones that offer no evidence about the outcomes of kids who go through this protocol.

I can’t come up with any explanation for the similarities in sentence structure, citations, and citation order between the AAP and AMA documents other than 1) the AMA document cribbed from the AAP document (which came out earlier), or 2) both documents adopted copy from some third source that was subsequently tweaked a bit. Either way, none of this suggests that a high level of critical, independent thinking went into these documents.

The American Psychiatric Association citation points to two documents. The first is “Best Practices” from “A Guide for Working With Transgender and Gender Nonconforming Patients.” This document has nothing to do with the debate at hand — there’s no mention of youth treatment anywhere. The “Medical Treatment and Surgical Interventions” section of the document, for example, deals entirely with adults, with not a puberty blocker in sight. So Science Vs is simply pointing us to an irrelevant citation.

The second APA document is “Position Statement on Treatment of Transgender (Trans) and Gender Diverse Youth.” That one includes the sentence “Trans-affirming treatment, such as the use of puberty suppression, is associated with the relief of emotional distress, and notable gains in psychosocial and emotional development, in trans and gender diverse youth.” The citation supporting that claim points to… oh, there isn’t one. Okay.

Finally, the American Academy of Child & Adolescent Psychiatry: That document is “AACAP Statement Responding to Efforts to ban [sic] Evidence-Based Care for Transgender and Gender Diverse Youth.” To repeat myself, I agree completely with the position expressed in the document (it can be true both that we don’t have a lot of great evidence for youth gender medicine and that having legislators ban it outright is a terrible idea likely to do far more harm than good), but again, there’s citational mischief.

The sentence “Research consistently demonstrates that gender diverse youth who are supported to live and/or explore the gender role that is consistent with their gender identity have better mental health outcomes than those who are not (3, 4, 5)” points to these three documents.

The first is a study that can’t provide any information on the question at hand (there is no comparison group of less-supported kids).²

The second document is a study concerned with a group of 245 LGBT kids (of whom only 9% were trans) that found, as you’ll see in Tables 2 and 3, no statistically significant links, among those trans kids, between family support and positive outcomes on six of the seven measures.³ (This could be a sample size thing, but a lot of the effect sizes are tiny and one of the odds ratios, on a suicide measure, points in the wrong direction.) The third is a Substance Abuse and Mental Health Services Administration document prepared by the author of the aforementioned study. It contains a bunch of citations, including to that study, which, as you’ll remember from five seconds ago, didn’t really show a link between family acceptance and well-being among the tiny group of trans kids studied.

To be clear, I’m not even skeptical of the claim that all else being equal, family support is generally linked to better outcomes among trans youth! I’m just saying these documents are written in a sloppy manner and that their citations often don’t come close to justifying the text to which they are affixed.

So yes, it is technically true that the “top dogs” have published documents supporting youth gender medicine. The quality of those documents is another story.

Back To Science Vs And These Studies On Blockers And Hormones

Like I said, Science Vs deserves credit for its transparency — it releases show transcripts that feature many endnotes ostensibly backing up its specific claims, which is something a lot of outlets don’t do.

The relevant, post-correction portion of the transcript for “Trans Kids: The Misinformation Battle,” which comes right after the “top dogs” claim, now reads:

And the reason that it's not controversial is because – again – we need to look at what happens if you do nothing. Like you don’t allow your kid to go on hormones. And just last month - a study from Seattle was published looking at just this. It had followed about 100 young adults, and compared those who got this gender affirming care - to trans folks who didn’t.[119] And they found that

while those who got this treatment ultimately felt better afterwards,[120] those who didn’t felt worse and worse.[121] And by the end of the study, those who got gender affirming care were 73% less likely to have thoughts of killing themselves or hurting themselves.[122] Other research suggests the same thing.[123][124][125][126][127][128]

I think the revised summary of the Tordoff study is still quite misleading in light of all the above issues, but setting that aside, you’ll see that Science Vs also cites not one but six other studies to justify its claim that Tordoff and her team basically just replicated an already-established finding. (Zukerman only mentions “allow[ing] your kid to go on hormones,” but I think it’s quite clear from context, and from the citations, that she’s talking about both hormones and blockers. The Seattle study is itself about both, and “hormones” is sometimes used loosely to refer to both treatments.)

Let’s list the papers in question and label them for ease of reference:

Study 1 [endnote 123]: Turban JL, Beckwith N, Reisner SL, Keuroghlian AS. Association Between Recalled Exposure to Gender Identity Conversion Efforts and Psychological Distress and Suicide Attempts Among Transgender Adults. JAMA Psychiatry. 2020;77(1):68–76

Study 2 [endnote 124]: Kuper LE, Stewart S, Preston S, Lau M, Lopez X. Body Dissatisfaction and Mental Health Outcomes of Youth on Gender-Affirming Hormone Therapy. Pediatrics. 2020 Apr;145(4):e20193006

Study 3 [endnote 125]: de Vries AL, McGuire JK, Steensma TD, Wagenaar EC, Doreleijers TA, Cohen-Kettenis PT. Young adult psychological outcome after puberty suppression and gender reassignment. Pediatrics. 2014 Oct;134(4):696-704

Study 4 [endnote 126]: Costa R, Dunsford M, Skagerberg E, Holt V, Carmichael P, Colizzi M. Psychological Support, Puberty Suppression, and Psychosocial Functioning in Adolescents with Gender Dysphoria. The Journal of Sexual Medicine. 2015 Nov;12(11):2206-14

Study 5 [endnote 127]: Achille C., Taggart T., Eaton N. R., Osipoff J., Tafuri K., Lane A., Wilson T. A. (2020). Longitudinal impact of gender-affirming endocrine intervention on the mental health and well-being of transgender youths: preliminary results. International Journal of Pediatric Endocrinology, 2020, 8

Study 6 [endnote 128]: Turban JL, King D, Kobe J, Reisner SL, Keuroghlian AS. Access to gender-affirming hormones during adolescence and mental health outcomes among transgender adults. PLOS ONE 17(1): e0261039

For each study, I’ll paste the exact language Science Vs included in its show endnotes, which is always just a quote from the study itself, and then explain what the study actually shows. The links within each quote, which the producers added, point to the papers themselves.

Study 1: “For transgender adults who recalled gender identity conversion efforts before age 10 years, exposure [to those efforts] was significantly associated with an increase in the lifetime odds of suicide attempts.”

This study has some pretty major flaws — I’m generally sympathetic to this letter to the editor critiquing it, which we’ll return to a bit later in a different context — but even setting that aside, it has nothing to do with gender-affirming medicine, which is the issue at hand. It’s irrelevant to this debate. (To be clear, I’m against any sort of genuine conversion therapy! But if you read the critique, you’ll see that this study might not be accurately capturing the subset of kids who experienced it.)

Study 2: “Lifetime and follow-up rates were 81% and 39% for suicidal ideation, 16% and 4% for suicide attempt[.]”

This is a study of kids who went through a Dallas gender clinic to obtain puberty blockers and/or hormones. (The New York Times’ news podcast The Daily did a really good two-part series on what’s going on in Texas, including the state’s Republican leadership’s disgraceful efforts to shut this clinic down — though, thankfully, a recent court order partially and temporarily reopened it.) There’s no control/comparison group, so the researchers examined change over time as the cohort began taking blockers and/or hormones.

The Science Vs producers’ choice of quote suggests they believe this sample became less suicidal after going on GAM — if not, I don’t know why they would include this citation and this quote in this context. But I’m not sure they read the paper; if they did, they’d realize they’re referencing the wrong comparison. After all, what matters most is the change in suicidality before versus after treatment, not how either figure compares to lifetime suicidality.

Here’s the authors’ rundown, from Table 5, “Suicidal Ideation, Suicide Attempt, and [Nonsuicidal Self-Injury]”:

There appears to be a minor error; assuming the chart is correct and the abstract is incorrect, the language in the abstract should have read “Lifetime and follow-up rates were 81% and 38% [not 39%] for suicidal ideation, 15% [not 16%] and 5% [not 4%] for suicide attempt[.]”

If you do compare the suicide/self-harm evaluations conducted at baseline versus at follow-up, at first glance it appears the kids got worse, since all the numbers and percentages increase from the middle column to the right column. But in reading the paper and running it by a couple of social scientists, I couldn’t quite figure out whether the question was asked in an apples-to-apples way that allows for comparison between the two time periods, so I reached out to two of the authors, Laura E. Kuper and Sunita Stewart, for an explanation.

Via a spokesperson, they confirmed that no, you can’t really compare the middle column to the right one:

We cannot say from these data whether there was a change in the number of youth who reported suicide ideation and NSSI during the study period. The time frames of comparison are not equal. The follow-up period was an average of 15 months after the initial evaluation, and the time-based report at initial evaluation (the middle column in the table) was for a briefer period of between 1 (for ideation and NSSI) and 3 months (for attempt). For this reason, no statistical comparisons would have been appropriate.

If you can’t make any statistical claims about the meanings of these numbers, why include, in your abstract, the line “Lifetime and follow-up rates were 81% and 39% for suicidal ideation, 16% and 4% for suicide attempt”? I’d argue this is slightly disingenuous — call it a misdemeanor — because readers are likely to misinterpret this as a statistical claim, as a decrease caused by the medicine. (It also obscures the fact that the gap between the lifetime and baseline suicidality percentages is even bigger — it appears the sample’s suicidality issues had mostly resolved by the time they got to the clinic.)

Anyway, the point is we can’t determine anything about suicidality here because the three columns are asking such different questions about suicidal ideation, suicide attempts, and nonsuicidal self-injury: whether the subjects had experienced any of these things at any point in their life, whether they’d experienced them in the last 1–3 months, and whether they’d experienced them between intake at the clinic and their follow-up appointment (a span that ranged from 11 to 18 months, depending on the kid). If you ask someone if they’ve done X in the last month, and then you ask them if they’ve done it in the last ten months, it’s probably more likely they’ve done it in the last ten months simply because that’s a much longer period of time. Contrary to what Science Vs is clearly implying, there isn’t useful data here.
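To see why unequal time windows wreck the comparison, here is a rough sketch in Python. It assumes a constant, independent monthly probability of the event, which is a simplification of my own and not anything the paper models, and the 5% figure is a made-up number used purely for illustration.

```python
def prob_at_least_once(monthly_prob: float, months: int) -> float:
    """Chance of at least one event over `months` months.

    Assumes the same independent probability every month, which is a
    simplifying assumption made only for this illustration.
    """
    return 1 - (1 - monthly_prob) ** months


# Hypothetical monthly probability; not taken from the study.
monthly_prob = 0.05

one_month = prob_at_least_once(monthly_prob, 1)        # baseline-style window
fifteen_months = prob_at_least_once(monthly_prob, 15)  # average follow-up span

print(f"1-month window:  {one_month:.0%}")
print(f"15-month window: {fifteen_months:.0%}")
```

Under these assumptions, the same underlying risk produces a figure of roughly 5% over one month and roughly 54% over fifteen, so a higher follow-up percentage by itself says nothing about whether anyone got better or worse.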

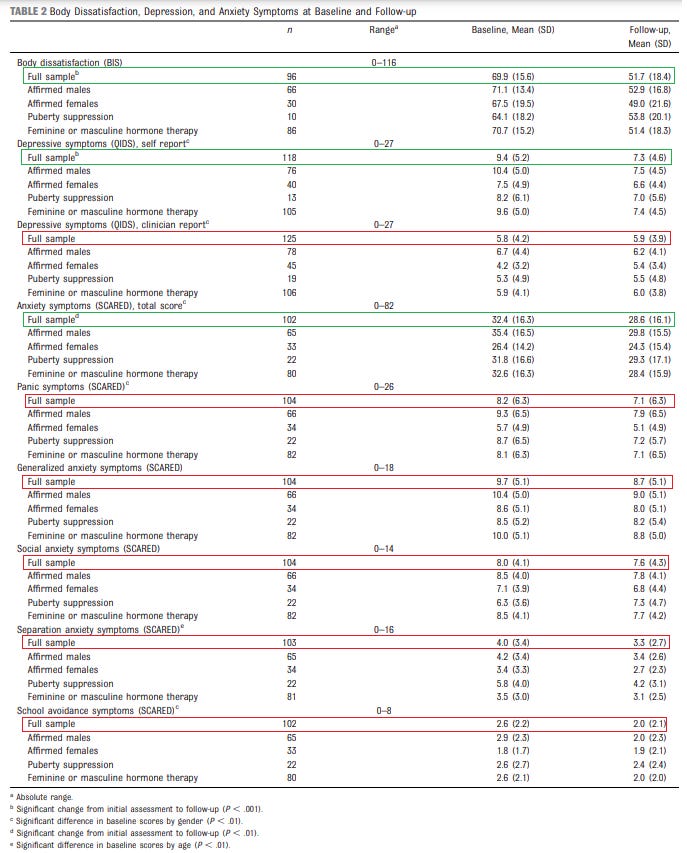

What about the rest of the study’s findings? It’s very hard to know what to make of them, to be honest. I’m tempted to say this study doesn’t really tell us much about whether blockers and hormones improve trans kids’ mental health, and that maybe it can’t given certain characteristics of the cohort. In the abstract, the researchers note: “Youth reported large improvements in body dissatisfaction (P < .001), small to moderate improvements in self-report of depressive symptoms (P < .001), and small improvements in total anxiety symptoms (P < .01).” “Small to moderate improvements in self-report of depressive symptoms” somewhat obscures the fact that measured another, more rigorous way — via clinician evaluation — the kids in the study did not experience an improvement in their depressive symptoms over time.

I feel pretty strongly about favoring clinician report rather than self-report, but even if you disagree with me, it won’t change the overall picture much. The researchers used an instrument called Quick Inventory of Depressive Symptomatology, which indeed has self- and clinician-reported versions. This paper notes that “Total QIDS scores range from 0 to 27… with scores of 5 or lower indicative of no depression, scores from 6 to 10 indicating mild depression, 11 to 15 indicating moderate depression, 16 to 20 reflecting severe depression, and total scores greater than 21 indicating very severe depression.”

Whether you trust the self-report or the clinician report version more, it’s the same basic deal in this study: The kids entered the study with “mild” depressive symptoms, they went on blockers or hormones, and they exited the study with… “mild” depressive symptoms. Yes, by self-report there was a statistically significant drop from 9.4 to 7.3, while by clinician report the kids went from 5.8 to 5.9 (not statistically significant), but all these scores sit in, or within a fraction of a point of, the 6–10 “mild depression” range, and I think it’s fair to ask whether even the self-report change is clinically significant. Depression-wise, these kids were almost fine and they stayed almost fine:

By clinician report (on the right), a full 85% of the sample either had “not elevated” or “mild” depression symptoms at baseline. There wasn’t much room for them to get better, so we can’t say much about the lack of change.
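The QIDS severity bands quoted earlier can be written as a small lookup, sketched here in Python. Two choices are my own assumptions rather than anything from the paper: group means are rounded to the nearest whole point before being classified, and a score of exactly 21 is treated as “very severe” (the quoted description leaves that value ambiguous).

```python
def qids_band(total_score: float) -> str:
    """Map a QIDS total (0 to 27) onto the severity bands quoted above.

    Assumptions: means are rounded to the nearest whole point first, and
    a score of exactly 21 counts as "very severe".
    """
    score = round(total_score)
    if not 0 <= score <= 27:
        raise ValueError("QIDS totals range from 0 to 27")
    if score <= 5:
        return "none"
    if score <= 10:
        return "mild"
    if score <= 15:
        return "moderate"
    if score <= 20:
        return "severe"
    return "very severe"


# Group means as reported in the text above.
reported_means = {
    "self-report, baseline": 9.4,
    "self-report, follow-up": 7.3,
    "clinician report, baseline": 5.8,
    "clinician report, follow-up": 5.9,
}

bands = {label: qids_band(mean) for label, mean in reported_means.items()}
for label, band in bands.items():
    print(f"{label}: {band}")
```

With those assumptions, all four means land in the same band, which is the whole point: the statistically significant self-report drop never moves the group out of “mild.”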

If we zoom out on Table 2, we’ll see that the researchers got before and after measurements for nine mental health variables in total, and that the other results, too, are hard to interpret. Six of those variables are from the 41-item Screen for Child Anxiety Related Disorders (SCARED) instrument: each kid’s full score, as well as their subscores for panic symptoms, school avoidance, and generalized, social, and separation anxiety.

Here’s what happens if we go through the table and put green around each statistically significant change the “Full sample” experienced over time (the authors don’t report any statistically significant changes among any of their subgroups), and red around every nonsignificant finding:

So out of nine variables, there were three that improved, to a statistically significant degree, over time. Reading from top to bottom on the table, the first was body dissatisfaction, where there was a genuinely sizable reduction. The second was self-reported depression, which we just discussed. The third is anxiety symptoms as measured by the SCARED scale, which went down by 3.8 points on an 82-point scale. “A total score of ≥ 25 may indicate the presence of an Anxiety Disorder,” so the kids started above this cutoff (32.4, on average) and ended… above this cutoff (28.6), just by a smaller margin. In much the same way the kids started with “mild” depression symptoms and ended the study with “mild” depression symptoms, they started the study a bit over the “may have an anxiety disorder” threshold and they ended it in that same range. I’d ask the same question about clinical significance here that I did about the depression scores.
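The same kind of back-of-the-envelope check works for the anxiety numbers. This Python sketch uses the means and cutoff quoted above and assumes the standard SCARED scoring of 0 to 2 points per item, which is where the 82-point maximum comes from; the variable names are mine.

```python
SCARED_ITEMS = 41
MAX_POINTS_PER_ITEM = 2  # assumed standard scoring: each item is 0, 1, or 2
CUTOFF = 25              # "may indicate the presence of an Anxiety Disorder"

# Full-sample means as reported in the text above.
baseline_mean, followup_mean = 32.4, 28.6

scale_max = SCARED_ITEMS * MAX_POINTS_PER_ITEM
drop = round(baseline_mean - followup_mean, 1)
drop_share_of_scale = drop / scale_max

still_above_cutoff = baseline_mean >= CUTOFF and followup_mean >= CUTOFF

print(f"Scale maximum: {scale_max}")
print(f"Drop: {drop} points ({drop_share_of_scale:.1%} of the scale)")
print(f"Above cutoff at both time points: {still_above_cutoff}")
```

So the statistically significant change amounts to under 5% of the scale's range, and the group mean sits above the screening cutoff both before and after.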

It’s tough to know what to make of the subscale variables in particular. There’s just a huge amount of room for subjective interpretation. For something like school avoidance, where the kids scored a 2.6 at intake and the threshold for concern is 3 (on an eight-point scale), what should we make of the lack of statistically significant improvement? It’s hard to do that much better than a 2 (say) on such a small scale. Maybe there’s stronger evidence we should be concerned about the social anxiety disorder numbers: The cutoff there is 8 on a 14-point scale, which is exactly what the kids scored at intake, and there was no statistically significant improvement over time. This is all a pretty mixed bag, because on the one hand you don’t really see any statistically significant drops on the subscales, but on the other some of the baseline numbers were low enough that there isn’t much room for improvement.

In light of this, an honest skeptic of the claim that youth gender medicine improves trans kids’ mental health should acknowledge that the nonsignificant results are hard to interpret and not always so damning; similarly, an honest believer in this claim should acknowledge that there isn’t much here to support their opinion. Unless you engage in cherry-picking, I don’t think this study provides anything close to clear evidence one way or the other.

I am very glad the Dallas team is doing this research and wish many more American gender clinics, who are embarrassing laggards when it comes to producing useful data, would follow their lead (while we’re on the subject, I wish Republicans didn’t pose existential threats to youth gender clinics). But it’s an oversimplification to say that this study offers real evidence blockers and hormones improve kids’ mental health. If you’re going to make that claim, where’s the impressive improvement and why’s there so much red in that chart above? If your response to that is, “Well, the kids didn’t have much room to improve,” then you shouldn’t have claimed that they did in the first place!

Maybe the studies get clearer? Maybe there’s higher-quality and more impressive evidence forthcoming?

Study 3: “[125] After gender reassignment, in young adulthood, the GD [gender dysphoria] was alleviated and psychological functioning had steadily improved.”

In some circles this is simply known as the “Dutch study.” It’s that important and well-known, and I’ve referenced it as some of the best evidence we have for the efficacy of these treatments. It’s definitely the best study cited by Science Vs, and it shows that by young adulthood, the kids in this cohort had solid mental health.

So it should tell us something that even here, there are some pretty big questions. They’re well summed up by this article in the Journal of Sex & Marital Therapy by Stephen B. Levine, E. Abbruzzese, and Julia W. Mason:

While the Dutch reported resolution of gender dysphoria post-surgery in study subjects, the reported psychological improvements were quite modest. Of the 30 psychological measurements reported, nearly half showed no statistically significant improvements, while the changes in the other half were marginally clinically significant at best. The scores in anxiety, depression, and anger did not improve. The change in the Children’s Global Assessment Scale, which measures overall function, was one of the most impressive changes—however it too remained in the same range before and after treatment. [citations omitted]

The “Dutch approach,” at least as it was practiced during the time period covered by this 2014 study, involved careful screening of subjects. Back then this protocol was truly novel, and the clinicians really were interested only in giving blockers and hormones, followed by surgery, to young people with long, persistent histories of childhood gender dysphoria who didn’t have other major problems with mental or physical health, or lackluster family support, that might stymie their transitions. They thought these were the young people who had the best chance of living happy, healthy lives as trans adults. They also discouraged childhood social transition, because in their experience most kids’ gender dysphoria dissipated as puberty approached (the Dutch approach is designed to put kids on a medical track once it appears clear the GD is unlikely to desist).

As a result, the study has some of the same issues as the last one: The kids in this cohort who went on blockers and hormones, and who later received surgery, started out rather mentally healthy, which means, again, they didn’t have much room to get better, which makes these results difficult to apply to the present debate. For example, the authors note that the Beck Depression Inventory, which they administered to their subjects, has a scoring range of “21 items, 0–3 range,” or 0–63 for the total score. At baseline, the kids in the study had a BDI score of 7.89, which sits in the range of “These ups and downs are considered normal.”

Levine and his coauthors flatly argue that “The study cannot be used as evidence that these procedures have been proven to improve depression, anxiety, and suicidality.” It’s hard to dispute that — the subjects weren’t depressed or anxious at baseline, and suicidality wasn’t even measured, so how could the study offer such evidence? It’s good that the subjects’ mental health didn’t worsen over time, and certainly it would be a bad sign if kids going through these treatments suddenly developed new mental health problems, but this cohort can’t really tell us much about whether GAM will improve the mental health of kids who are in anguish.

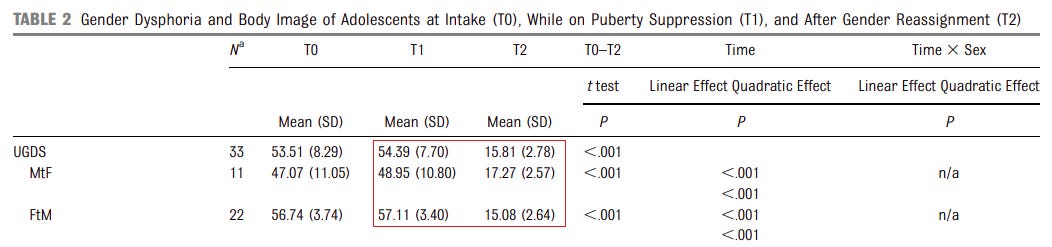

One of the genuinely impressive-seeming findings is that the Dutch subjects’ high-at-baseline gender dysphoria “was alleviated” at follow-up. But there’s a catch there, too, according to Levine and his coauthors. It involves the measure the researchers used, the Utrecht Gender Dysphoria Scale:

This 12-item scale, designed by the Dutch to assess the severity of gender dysphoria and to identify candidates for hormones and surgeries, consists of “male” and “female” versions. At baseline and after puberty suppression, biological females were given the “female” scale, while males were given the “male” scale. However, post-surgery, the scales were flipped: biological females were assessed using the “male” scale, while biological males were assessed on the “female” scale. We maintain that this handling of the scales may have at best obscured, and at worst, severely compromised the ability to meaningfully track how gender dysphoria was affected throughout the treatment. [citations omitted]

So after the trans boys went on blockers, took testosterone, and had double mastectomies, they were given an instrument with prompts like “My life would be meaningless if I had to live as a boy”; “I hate myself because I am a boy”; and “It would be better not to live than to live as a boy.” It would be shocking if someone who put this much effort into transitioning to a boy/man answered these questions in the affirmative. This seriously calls into question one of the best results the Dutch team got:

Another problem is that this research doesn’t control for the effects of counseling or pharmaceuticals. This is a cohort of young people who were in contact with a multidisciplinary gender clinic for years, and who had regular access to counseling, so it stands to reason that at least some of the improvements they experienced might be attributable to factors separate from blockers, hormones, and surgery (the previous study I wrote about, out of Dallas, did adjust for these variables). Clearly the Dutch clinicians themselves thought these factors bolstered their clients’ mental health, or they wouldn’t have made regular counseling a prerequisite for transitioning. The study doesn’t account for that, leaving us to speculate about the role of factors distinct from youth gender medicine itself, including simply getting older: The average age at first assessment was 13.6, and the average age of final assessment was 20.6. A lot of people’s mental health improves on its own during this particular seven-year span. (During other seven-year spans, like, say, 7 to 14, people’s mental health is more likely to worsen. Puberty is a hell of a thing!)

It’s worth pausing here to note just how much more conservative the Dutch approach is than what is presently favored by the most enthusiastic American activists and clinicians, including two of the voices interviewed in the Science Vs segment (Jack Turban and Florence Ashley). While I understand why people latch on to one of the few solid-quality studies we have, even setting aside the aforementioned methodological challenges, it is a stretch to claim that because the Dutch got some pretty good results, their research is applicable to American clinics dedicated to far less “gatekeeping.” (I don’t like using that word in the context of minors, since adults have a responsibility to gatekeep, but that’s the common terminology.)

The Dutch approach is absolutely out of fashion in many American youth gender clinics. There are no zoomed-out data on how these clinics go about their business, because no one has bothered to try to gather any (not an easy task, to be fair), but if you look around at the clinicians who have big online platforms, get quoted in major outlets, etc., you will see that they continually emphasize the need for clinicians and parents to engage in less gatekeeping and more trusting tweens and teens to know accurately what medical treatments are best for them. There are some who clearly support more careful, Dutch-style multidisciplinary approaches that include a lot of psychological assessment (Laura Edwards-Leeper and Erica Anderson are the two most famous examples), but this is not the trajectory of things in the States at all.

On a related note, there’s some evidence that the profile of kids seeking youth gender medicine is changing. In fact, writing in Pediatrics in 2020, the 2014 study’s lead author, Annelou de Vries, raised the question of “whether the positive outcomes of early medical interventions also apply to adolescents who more recently present in overwhelming large numbers for transgender care, including those that come at an older age, possibly without a childhood history of [gender incongruity/dysphoria].” The same could be said of what appears to be a growing number of kids who arrive at gender clinics with complicated psychological comorbidities. Since a kid who lacked childhood GD or who had serious psychological problems (or both) would have been disqualified outright from the 2014 Dutch study, that study is likely inapplicable to these different populations of kids going on blockers and hormones today, and to clinics that follow different procedures.

I’ll leave it at that — I did find Levine and his coauthors’ article to be an informative and thoughtful example of youth gender medicine skepticism. Overall, once you acknowledge the caveats, the Dutch study does show that a cohort of very carefully screened gender dysphoric kids with a lot of family and mental health support who went on blockers and hormones, and who got surgery, appear to have been doing pretty well in early adulthood. I just don’t think the results can be used to support or attack Zukerman’s claim in light of the sample biases. I also don’t think this finding really matches Science Vs’s stance on the issue of youth gender medicine more broadly, because, based on the show’s work in this area, it seems highly unlikely it would ever discourage social transition, or would support “a comprehensive psychological evaluation with many sessions over a longer period of time” prior to the commencement of any physical interventions, as the Dutch described their protocol.

Study 4: “[126] At baseline, GD adolescents showed poor functioning with a CGAS [Children’s Global Assessment Scale] mean score of 57.7 ± 12.3. GD adolescents’ global functioning improved significantly after 6 months of psychological support (CGAS mean score: 60.7 ± 12.5; P < 0.001). Moreover, GD adolescents receiving also [sic] puberty suppression had significantly better psychosocial functioning after 12 months of GnRHa [puberty blockers] (67.4 ± 13.9) compared with when they had received only psychological support (60.9 ± 12.2, P = 0.001).”

This isn’t good. As the Oxford sociologist and frequent critic of youth gender medicine research Michael Biggs pointed out in a letter to The Journal of Sexual Medicine, which also published this study, the methodology here is completely incapable of isolating the effects of puberty blockers and hormones.

The authors, based at the world-famous Gender Identity Development Service (GIDS) in Great Britain, adopted a version of the Dutch approach that’s quite heavy on psychological assessment and addressing transition-readiness concerns (though it looks like far more of the kids in this sample had already socially transitioned than in the Dutch one). Again, I don’t think Science Vs really supports this approach.

The main problem with drawing any conclusions from this study is that the researchers compared one group of kids who started puberty blockers relatively soon after entering the study with another that didn’t start blockers at all during the study (they did later) because, in the views of the assessing clinicians, they were experiencing “possible comorbid psychiatric problems and/or psychological difficulties.”

The two groups were never the same at baseline: one had psychological problems the other didn’t. At baseline, the psychologically worse-off group scored a bit lower on the study’s main measure, the Children’s Global Assessment Scale, than the psychologically better-off group, though the difference wasn’t statistically significant (the researchers didn’t administer other scales, such as ones measuring anxiety or depression symptoms, that seem likely to have revealed other differences between the groups). Then, at the end of the study, after one group but not the other had been on blockers for a while… the same deal: The group with the psychological problems scored a bit worse, but the difference wasn’t statistically significant, “possible [sic] because of sample size,” explain the authors.

The sample size is fairly low by the end of the study in part because about 65% of the subjects disappear along the way, with no explanation from the authors about this giant drop-off. The apparent dropouts are distributed almost exactly evenly between the blockers and no-blockers groups, so we don’t have the differential attrition rate concerns we had in the study out of Seattle I wrote about in April, but the overall rate is still quite high:

You can’t have your sample shrink by 65% and then offer no explanation as to why that happened!

Sample size and other issues aside, while the statistically insignificant difference in the CGAS scores of the blockers and no-blockers groups did widen slightly between the penultimate and final assessment points, there’s no way to evaluate what role puberty blockers played in this result, simply because one group, but not the other, was also dealing with apparently serious mental health problems. On top of all that, the researchers didn’t account statistically for access to counseling, meaning we can’t rule out that the counseling, rather than the blockers, explains at least part of the overall CGAS improvement over time, which after all was seen in both the blockers and no-blockers groups. (The authors do note that simply “getting older has been positively associated with maturity and well-being,” and that that could be a factor here.)

Biggs pointed out an additional red flag to me: One of the primary measures the researchers use is the aforementioned Utrecht Gender Dysphoria Scale also employed by the Dutch. The researchers note that this was one of their measures, provide the baseline data, and then… poof. The UGDS disappears from the paper. We never find out what the follow-up numbers were. Why? Surely the point of administering this scale was to track the severity of gender dysphoria over time? It seems very unlikely to me that if they got impressive results they wouldn’t have reported them, though on the other hand if they’re seeking to hide their UGDS results, why mention having administered the scale at all? This is just very weird and amateurish science, to mention the scale, provide the baseline readings, and then sort of wander off to look at a butterfly. I emailed coauthor Rosalia Costa on June 3 to ask about this, and then nudged her on June 6, adding Polly Carmichael, but I didn’t hear back. (I’d also be remiss if I didn’t point out that another, more recent study of GIDS patients coauthored by Carmichael came out in 2021 in PLOS ONE, and it found no over-time improvements on any mental health measures among a subset of kids who went on blockers. This paper goes uncited by Science Vs.)

Wendy Zukerman’s claim, you’ll recall, was about “what happens if you do nothing. Like you don’t allow your kid to go on hormones.” Meaning: They will suffer. One way to interpret this study is that it offers no evidence for or against her claim because the core comparison is so broken. Another interpretation — one that’s less charitable but technically correct — is that this study directly contradicts Zukerman:

The red line — representing kids who sought blockers but weren’t able to access them during this time period — goes up. It is at a higher point at Time 3 than it is at Time 0 (though all the statistically significant improvement happens from T0 to T1). So what happens if you don’t let kids go on blockers in this study? On the one measure of these kids’ psychological well-being where the researchers bother reporting their follow-up data, they get better.

To be crystal clear, I am not endorsing the idea of capriciously withholding puberty blockers from kids with severe and persistent gender dysphoria. I’m simply pointing out how silly it is to cite this study in support of the idea that if you do withhold these treatments, terrible things will happen. This study doesn’t show that.

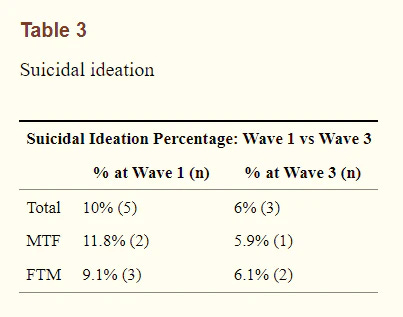

Study 5: “[127] Between 2013 and 2018, 50 participants (mean age 16.2 + 2.2 yr) who were naïve to endocrine intervention completed 3 waves of questionnaires. Mean depression scores and suicidal ideation decreased over time while mean quality of life scores improved over time.”

This quote is from the “Results” section of this paper’s abstract. Let’s look at the entire paragraph in question, with the part that Science Vs didn’t include in its notes bolded:

Between 2013 and 2018, 50 participants (mean age 16.2 + 2.2 yr) who were naïve to endocrine intervention completed 3 waves of questionnaires. Mean depression scores and suicidal ideation decreased over time while mean quality of life scores improved over time. **When controlling for psychiatric medications and engagement in counseling, regression analysis suggested improvement with endocrine intervention. This reached significance in male-to-female participants.**

So when the researchers, to their credit, controlled for the stuff that should be controlled for, endocrine interventions (meaning blockers and hormones) weren’t linked to any statistically significant improvements among female-to-male participants — a full two-thirds of the sample (33 female-to-male, 17 male-to-female, say the researchers).

Here’s a table laying that out, looking at three psychological scales the researchers used to measure the well-being of the study’s subjects:

The researchers made 12 statistical comparisons, and a grand total of one of them came back statistically significant by the traditional P < .05 threshold, while four more approached that threshold. And there is almost nothing on the right side of the table offering any evidence that these powerful medical treatments benefited the mental health of the female-to-male transitioners, once access to counseling and pharmaceuticals were taken into account.
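One way to put “one out of 12” in context is to ask how often you’d expect at least one significant result from chance alone. The sketch below assumes the 12 comparisons are independent and that there is no true effect. That independence assumption is mine and is almost certainly too simple here, since the tests involve the same kids and overlapping scales. Treat it as a rough illustration, not as an analysis the authors performed.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across n independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** n_tests


N_TESTS = 12   # comparisons in the table described above
ALPHA = 0.05   # the traditional P < .05 threshold

fwer = familywise_error_rate(N_TESTS, ALPHA)

# Average number of "significant" results expected from chance alone.
expected_false_positives = N_TESTS * ALPHA

# A Bonferroni-style per-test threshold that caps the overall rate near ALPHA.
bonferroni_threshold = ALPHA / N_TESTS

print(f"Chance of at least one false positive: {fwer:.1%}")
print(f"Expected false positives: {expected_false_positives:.1f}")
print(f"Bonferroni per-test threshold: {bonferroni_threshold:.4f}")
```

Under those assumptions, a single hit at P < .05 among 12 tests is roughly a coin flip even if the treatment does nothing, which is why one significant result doesn’t carry much weight by itself.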

For transparency’s sake, I should note that the authors have an explanation for their weak findings: “Given our modest sample size, particularly when stratified by gender, most predictors did not reach statistical significance. This being said, effect sizes (R2) values were notably large in many models. In [male-to-female] participants, only puberty suppression reached a significance level of p < .05 in the [Center for Epidemiologic Studies Depression Scale Revised]. However, associations with [Patient Health Questionnaire 9-item] and [Quality of Life, Enjoyment, and Satisfaction Questionnaire – Short Form] scores approached significance. For [female-to-male] participants, only cross sex hormone therapy approached statistical significance for quality of life improvement (p = 0.08).”

I’m glad the researchers broke things out by natal sex — it’s ridiculous that so many youth gender medicine researchers continue to fail to do so given that testosterone and estrogen are completely different substances with completely different effects (more on this in a bit). But once they did, they were left with almost no evidence these treatments helped. I guess there are a few promising blips on the male-to-female side, but among the female-to-male transitioners who made up two-thirds of this study? There’s basically no evidence that they benefited at all from blockers or hormones. In this sample, by these methods, on average they would have been just as well off solely receiving counseling and medication (if indicated) for their mental health symptoms.

Let’s imagine Science Vs were evaluating a study not of puberty blockers and hormones, but of a novel treatment for coronavirus promoted by Joe Rogan. Let’s also imagine that the authors of that study published a study evaluating the treatment in which they argued, “Well, we didn’t reach statistical significance in most of our tests, but we had a small sample size. Plus, there are some potentially promising results in a subgroup that comprised one-third of our sample.” It goes completely without saying that Science Vs would describe this as a weak finding that should nudge us toward skepticism, not acceptance, of the treatment in question. Why do different standards apply here?

Interestingly, this is the second time I’ve seen a major, respected, cut-through-the-bullshit science outlet treat this study in a strikingly credulous manner: Steven Novella and David Gorski committed a similar error on their website, Science-Based Medicine, which has had its own Nordberg-in-the-opening-scene-of-The-Naked-Gun-level issues covering this subject accurately. It should tell us something that when it comes to the youth gender medicine debate, some of the leading, supposedly skeptical voices are making the exact same sorts of mistakes in the exact same direction, over and over and over. They never make mistakes the other way; they never falsely understate the strength of the evidence for puberty blockers and hormones.

I already covered the suicidal ideation claim from this paper when I wrote about SBM’s coverage. Yes, the researchers themselves note in the abstract that “[S]uicidal ideation decreased over time,” but right there in the paper they also note that “Regression models for suicidal thoughts were not estimable due to the low frequency of endorsement and small cell sizes across gender.”

There was just so little suicidal ideation here that there’s no way to make the appropriate statistical comparisons:

So this paper provides no causal evidence about the effects of blockers and hormones on reducing suicidal ideation.

Study 6: “[128] Jack Turban’s paper— After adjusting for potential confounders, accessing GAH during early adolescence (aOR = 0.4, 95% CI = 0.2–0.6, p < .0001), late adolescence (aOR = 0.5, 95% CI = 0.4–0.7, p < .0001), or adulthood (aOR = 0.8, 95% CI = 0.7–0.8, p < .0001) was associated with lower odds of past-year suicidal ideation when compared to desiring but never accessing GAH. [GAH = gender affirming hormones]”

I’m going to go deep on this PLOS ONE paper because it’s very recent (January of this year), very influential, and I have some new developments to report about it.

Turban and his colleagues’ method, both here and in another highly touted study they published in Pediatrics in 2020, is to take the subset of 2015 U.S. Transgender Survey respondents who recalled having ever wanted puberty blockers or hormones, and to then compare the mental health of those who recalled having accessed them to those who recalled never having done so. (The 2020 study covers blockers and this one covers hormones.)

There are many reasons to be skeptical that the USTS can provide us with any generalizable data about trans people in the U.S. This is a very nonrepresentative sample that wildly differs from past attempts to generate decent data about the American transgender population (which, to be clear, is no easy task).

Here’s a chart from the letter to the editor I mentioned earlier critiquing Turban et al.’s conversion therapy paper, which was also based on the USTS. In it, the authors note the differences between the trans people surveyed in the USTS and those included in the 2014 to 2017 data from the Behavioral Risk Factor Surveillance System (BRFSS), a more rigorously conducted effort administered by the Centers for Disease Control and Prevention:

There are some gigantic differences here on crucial questions like age (the USTS skews very young compared to the BRFSS, with 84% of the respondents in the former and just 52% in the latter aged 18 to 44) and education (the USTS skews much more educated, with 47% college/technical school graduates in that sample versus 14% in the BRFSS).

The authors of the critique of Turban and his colleagues’ conversion therapy paper argue, credibly, that these differences may have arisen because “the participants [in the USTS] were recruited through transgender advocacy organizations and subjects were asked to ‘pledge’ to promote the survey among friends and family.” This claim jibes with the survey administrators’ own description of their outreach efforts.

So there’s a serious risk that the USTS skews heavily toward younger, more politically engaged, more highly educated members of the trans community. In much the same way sampling Jewish Americans at Chabad houses or black Americans at NAACP meetings definitely wouldn’t provide you with data you could safely extrapolate to the broader Jewish or black populations, something similar is almost certainly going on with the USTS.

The authors of the letter also highlight issues that go beyond mere sampling concerns:

A number of additional data irregularities in the USTS raise further questions about the quality of data captured by the survey. A very high number of the survey participants (nearly 40%) had not transitioned medically or socially at the time of the survey, and a significant number reported no intention to transition in the future. The information about treatments received does not appear to be accurate, as a number of respondents reported the initiation of puberty blockers after the age of 18 years, which is highly improbable (Biggs, 2020). Further, the survey had to develop special weighting due to the unexpectedly high proportion of respondents who reported that they were exactly 18 years old. These irregularities raise serious questions about the reliability of the USTS data.

If anything, the authors are understating the puberty blockers issue. As the USTS researchers explain in an endnote:

Although 1.5% of respondents in the sample reported having taken puberty-blocking medication, the percentage reported here reflects a reduction in the reported value based on respondents’ reported ages at the time of taking this medication. While puberty-blocking medications are usually used to delay physical changes associated with puberty in youth ages 9–16 prior to beginning hormone replacement therapy, a large majority (73%) of respondents who reported having taken puberty blockers in Q.12.9 reported doing so after age 18 in Q.12.11. This indicates that the question may have been misinterpreted by some respondents who confused puberty blockers with the hormone therapy given to adults and older adolescents. Therefore, the percentage reported here (0.3% or “less than 1%”) represents only the 27% of respondents who reported taking puberty-blocking medication before the age of 18.

In other words, so many respondents wrongly said they took puberty blockers that the survey’s architects simply had to toss the vast majority of the affirmative responses to this question. Okay. Why should we trust that everyone else in the survey had a firm grasp on which medication they had taken? And why should we trust that when people said they wanted blockers or hormones, they weren’t mixing up the two? No one will say, but researchers keep publishing studies based on this data set, perhaps because there are so few options for big samples of American trans people. I think there’s a case to be made that the USTS data set is simply too broken to tell us much of anything about trans people in the U.S., but I’ll leave that question to the survey methods experts (if you are one, I’m genuinely curious to get your thoughts).

Either way, it’s hard to count all the red flags inherent to Turban and his colleagues’ methodology. Obviously, the problem with USTS respondents not knowing accurately what medicine they took is potentially crippling on its own, because if we can’t even verify that the people who said they took a medicine did so, how can we say anything about that medicine’s effects on them? It’s also problematic to assume that because someone reports having ever wanted puberty blockers or hormones — the survey item on which this entire methodology hinges — it was a persistent and realistic desire. That question is phrased: “Have you ever wanted any of the health care listed below for your gender identity or gender transition? (Mark all that apply)” [bolding in the original]. If a USTS respondent selected “puberty blocking hormones” because at age 24, long after they were eligible for blockers, they went through a phase when they thought maybe their life would have turned out better if they’d gone on them, they’d be entered into the wanted-blockers “pile” and treated as equivalent to someone who wanted blockers at 13. These are very different situations, though. Nothing in the USTS even tracks whether the kids who wanted blockers or hormones had gender dysphoria, which is the most basic prerequisite for going on these medications, at least in the case of competent clinicians who follow the appropriate standards of care. (I don’t understand why the survey would collect data from respondents on whether they’d been diagnosed with HIV but not ask about gender dysphoria, or gender identity disorder as it was probably known at the time for most of the respondents. It seems important, for many reasons, to try to establish what percentage of people who identify as trans are seeking out and receiving these diagnoses.)

On top of all this is the causality issue — after the publication of Turban et al.’s USTS-based Pediatrics study ostensibly showing a link between recalled access to blockers and better mental health, a number of critics noted that it simply could be that young people with more mental health problems were less likely to be allowed to access blockers, because during the time period in question it was quite common for American clinicians to follow professional guidance instructing them to reject kids for such medication on the basis of uncontrolled, ongoing mental health problems (like the Dutch did). If this theory is true, it would mean that poor mental health caused a lack of access to blockers (that is, poor mental health → skeptical clinician following the rules and turning down a request for blockers → no blockers), rather than that a lack of access to blockers caused poor mental health. The new study in PLOS ONE supposedly addresses this by concerning itself mostly with past-month rather than lifetime mental health problems, but the broader point stands: It’s extremely hard to make unidirectional causal claims on the basis of this data.

In their studies, Turban and his team tend to dutifully note that the differences they derive from USTS data-slicing could be attributed to other factors as well, and that it is difficult to establish clear causal relationships between recalled access to youth gender medicine and later outcomes. But inevitably, Turban will then give quotes to major outlets, or write articles for them, in which he presents his results as straightforwardly causal — to the extent the got-medicine group did better, it was because they got medicine. For example, Turban wrote in The Washington Post that “at least 14 studies have examined the impact of gender-affirming care on the mental health of youths with gender dysphoria and have shown improvements in anxiety, depression and suicidality,” and “suicidality” links to the PLOS ONE paper about hormones. (For the record, Turban’s “14 studies” link, to this article he wrote in Psychology Today, also reports the details of those studies rather selectively, in my opinion, leaving out some vital caveats and weaknesses.)

So, in short and even before we dig into any of the details, Turban et al.’s PLOS ONE study is based on a likely nonrepresentative data set of young people who don’t seem to know what medicine they were on and when, who might not know what medicine they wanted and when, and it relies entirely on self-reported assessments of their mental health. Moreover, many, including Turban, have simply assumed that certain correlations uncovered via this method were driven by causation, despite there being little reason to make this assumption. Again, imagine a study with these characteristics being used to prop up an alternative Covid treatment: Science Vs would be like a vulture that had just spotted a seriously ailing buffalo.

But let’s assume, for the sake of argument, that none of these red flags exist. Let’s summon all the charity and benefit of the doubt we can muster and imagine this is a clinical sample where we know, for sure, who went on which medicine and when, and that we are therefore in a somewhat better position to accept causal claims.

That in mind, here’s what the Turban team said they found:

For everything that follows, let’s stick to the adjusted odds ratio (AoR) and accompanying P-values. Outlined in green is the finding that Turban and his team highlight in their write-up, and that Science Vs mentions in its show notes: Among respondents who reported ever wanting hormones, those who recalled receiving them reported a lower probability of past-year suicidal ideation than those who did not recall receiving them.

The actual question, from Section 16 of the USTS: “At any time in the past 12 months did you seriously think about trying to kill yourself?” The respondents who answered yes were then prompted to answer questions gauging the seriousness of their suicidal ideation: whether they had a specific plan for ending their life, whether their suicidality required medical attention, and whether they ended up in the hospital as a result. This is standard practice, because suicide experts recognize a pretty big, potentially life-and-death difference between someone who reports having considered suicide and someone who develops a specific plan to commit it. (I volunteered at a suicide hotline forever ago and we were supposed to triage people in this manner as early in the call as possible by asking if they were suicidal, and then, if they were, following up with questions about whether they had a plan and, if they did, if they already had in their possession whatever items they would need to carry it out, such as a gun or a knife.)

If someone answered yes to the USTS question “At any time in the past 12 months did you seriously think about trying to kill yourself?” but reported having never made a plan, let alone an attempt, they’d be considered to be at a more moderate risk of suicide than someone who answered yes to those follow-up questions. That obviously doesn’t mean any such assessment would be foolproof, and of course the stakes here are terrifyingly high, but the point is there are important gradations when it comes to suicidal ideation. (I wanted to make sure I wasn’t downplaying the severity of a situation like this, so I reached out to a suicide researcher I know. She helpfully pointed me to this resource from the organization Zero Suicide, which is adapted from the VA’s Rocky Mountain Mental Illness Research Education and Clinical Center (MIRECC) for Suicide Prevention, and which suggests that a young person in this situation would probably be placed in the “low acute risk” category.)

Outlined in red are the results that go ignored by Turban et al. in their write-up, and also by Science Vs: Turban and his team ran eight statistical tests on these more serious measures of suicidal ideation and behavior and went zero-for-eight in terms of hitting their P < .001 threshold for statistical significance (they beefed it up from the standard P < .05 to account for all the comparisons they’re making, which is good practice in general). In almost every case, the adjusted odds ratios hover around 1.0, which strongly suggests there’s just no link at all, among those who reported wanting to access hormones, between having recalled accessing them and having even a slightly lower risk of serious recent suicidal behavior or ideation. The cell that comes closest to hitting the significance threshold suggests that those who recalled wanting hormones and accessing them at age 16 or 17 were more than twice as likely to report having been hospitalized for a suicide attempt as those who recalled wanting but not accessing hormones. Whether or not you buy the idea that we should disregard this .01 P-value, there’s nothing here to suggest any promising findings with regard to a link between hormone access and the more serious measures of suicidal ideation and behavior.
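For readers who want the intuition behind those two ideas, here is a rough sketch. It computes an unadjusted odds ratio with a 95% confidence interval from a two-by-two table, and it shows how the chance of at least one false positive grows with the number of tests. The counts are hypothetical, the paper reports adjusted odds ratios from regression models rather than this simple calculation, and the false-positive formula assumes the tests are independent, which is a simplification.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio and Wald 95% CI for a 2x2 table.

    a: exposed with outcome      b: exposed without outcome
    c: unexposed with outcome    d: unexposed without outcome
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    low = math.exp(math.log(odds_ratio) - z * se_log)
    high = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, low, high

def familywise_error(alpha, n_tests):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests

# Hypothetical counts: 10% of each group reports the outcome
or_, low, high = odds_ratio_ci(a=30, b=270, c=100, d=900)
print(f"OR = {or_:.2f}, 95% CI = ({low:.2f}, {high:.2f})")

# Eight tests at the usual .05 threshold versus the stricter .001
print(f"P(any false positive), alpha=.05:  {familywise_error(0.05, 8):.3f}")
print(f"P(any false positive), alpha=.001: {familywise_error(0.001, 8):.3f}")
```

An odds ratio of 1.0 means the odds of the outcome are the same in both groups, and a confidence interval that straddles 1.0 means the data can't distinguish a small benefit from a small harm. The second function shows why a stricter threshold is sensible when many comparisons are run: with eight independent tests at .05, the chance of at least one spurious hit is roughly one in three.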

One would think that enthusiastic advocates for administering gender-affirming medicine to young people would be concerned about these null results, but as far as I can tell the authors haven’t mentioned them anywhere, despite the extensive publicity the paper — or, perhaps specifically, one row of a seven-row chart — garnered. (See my March tweetstorm on this study here.) None of this is mentioned in the body of the paper itself.

Now, on the other other hand, the kids in the got-hormones group reported less past-month severe psychological distress than those in the non-hormones group as measured by another binary variable: whether they met a certain threshold on the Kessler 6 Psychological Distress Scale. So it’s not like there’s nothing in this study pointing in the direction Turban and his team want — it’s just that when it comes to the most serious measures, the findings are concerningly barren. (Readers of my April post will also remember that reporting continuous variables dichotomously is an easy way for important features of data to get obscured — by this standard, a two-point improvement on a 25-point scale that crosses a certain threshold can sometimes register as more meaningful than a ten-point improvement that doesn’t.)
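The dichotomization problem is easy to show with two made-up respondents. In this sketch the scale runs from 0 to 24 and the cutoff for "severe distress" is set at 13. Treat that cutoff, and both sets of scores, as assumptions for the sake of illustration rather than as a description of how the paper coded its variable.

```python
SEVERE_CUTOFF = 13  # assumed cutoff on a 0-24 scale, for illustration only

def is_severe(score: int) -> bool:
    """True if a score counts as 'severe distress' under the assumed cutoff."""
    return score >= SEVERE_CUTOFF

# Hypothetical (before, after) scores for two respondents
respondents = {
    "A": (14, 12),  # small change that happens to cross the cutoff
    "B": (24, 14),  # large change that stays above the cutoff
}

def summarize(before: int, after: int) -> dict:
    return {
        "points_improved": before - after,
        # The binary measure only registers a move from severe to not severe
        "binary_improved": is_severe(before) and not is_severe(after),
    }

results = {name: summarize(*scores) for name, scores in respondents.items()}
for name, result in results.items():
    print(name, result)
```

Respondent A improves by two points and counts as a success on the binary measure. Respondent B improves by ten points and counts as no change at all. That is the sort of information a yes-or-no variable throws away.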

Just as Turban and his team simply pretend these null findings don’t exist, so does Science Vs. The average pop science consumer isn’t going to pull the study to check for themselves, so, mission accomplished: A bunch of people will think this study found impressive results for GAM, when in fact it’s a mixed bag at best and, by the researchers’ own methods and logic, runs counter to the claim commonly made by Turban and others that these medications are crucial for reducing serious suicidality and suicide attempts by transgender and gender nonconforming youth.

We can’t depart this study without making one more very important stop: this comment left on the PLOS ONE website by Michael Biggs, the aforementioned Oxford sociologist, and PLOS ONE’s response to it. Using the USTS data, Biggs attempted to replicate the Turban team’s findings as best as he could in light of the fact that the authors did not include sufficient statistical information in their paper for him to do so. Biggs notes that the study’s authors “have twice previously not replied to my requests to provide their command files,” and confirmed in an email to me that this concerned prior studies they published, and that he didn’t bother trying a third time for this study.

Biggs found a pretty straightforward statistical irregularity in the PLOS ONE study:

There are odd discrepancies between the raw frequencies reported by Turban et al. and the USTS dataset. According to the authors, 119 respondents reported beginning cross-sex hormones at age 14 or 15. But for the question 'At what age did you begin hormone treatment' (Q12.10), 27 respondents answered at age 14, and 61 answered at age 15, summing to 88. How did the authors obtain an additional 31 observations? It is not due to the imputation of missing values because the authors drop observations with missing values; the same procedure is followed here.

Biggs also notes that Turban’s team includes access to puberty blockers in their statistical models, but doesn’t report on that result. Biggs found that “Controlling for other variables, having taken puberty blockers has no statistically significant association with any outcome. This reveals that Turban et al.’s earlier finding from the USTS — which did not control for cross-sex hormones — is not robust.” So Turban appears to have called into question his own prior finding about puberty blockers, but this doesn’t garner even a brief mention in his paper. (Biggs also notes that Turban and his colleagues failed to control for other variables associated, at least in some studies, with enhanced well-being among trans people, such as access to gender-affirming surgery.)

But dwarfing all of these issues is what happened when Biggs broke out the results by natal sex:

Testosterone is consistently associated with better outcomes. Estrogen is associated with a lower probability of severe distress, but also with a higher probability of planning, attempting, and being hospitalized for suicide. The latter outcome is particularly disturbing: males who took estrogen have almost double the adjusted odds of a suicide attempt requiring hospitalization.

If Biggs is correct, then the data here provide an even more conflicting storyline — one in which a lot of the results aren’t just null, but point in the exact wrong direction. Turban and his team, by their own reasoning, would be forced to conclude that estrogen is dangerous for trans women from a suicidality perspective. (I’m not endorsing this view because I think the USTS and this methodology are both such messes that these studies don’t really tell us anything causal about the effects of gender-affirming medicine — I’m saying if you do accept these methods, data, and reasoning, you’re forced to take the good with the bad.) Either Turban’s team didn’t bother to check this, they did and then failed to report on these alarming results, or Biggs is incorrect.

This is potentially a big deal, especially considering the way Turban, the Stanford University hospital where he was then doing his residency, and so many media outlets have touted this study as solid evidence that access to hormones reduces suicidality. If Biggs is correct and accessing estrogen was in fact linked to “a higher probability of planning, attempting, and being hospitalized for suicide,” that absolutely requires further explanation from the authors. I emailed PLOS ONE and three of the study’s authors — Turban, Sari L. Reisner, and Alex S. Keuroghlian — to ask about this. I got a quick response from a PLOS ONE media associate saying, “I can see that you have reached out to the authors, and I hope they will be able to respond to you soon,” but after I didn’t hear back from the authors themselves, I followed up with the journal again.

I heard back from a senior communications associate, who told me that PLOS ONE is going to be examining Biggs’ claims. He also pointed me toward a newly posted “Editor’s Note” which reads “PLOS ONE is looking into the questions raised about this article. We will provide an update when we have completed this work.” I’ve asked Turban for his team’s code in the meantime, and according to PLOS ONE policy he’s required to provide it (I haven’t heard back yet). The code will only be so useful without the raw data, which is unfortunately obtainable only via a data-sharing agreement with the National Center for Transgender Equality, but it would be a start. While it would be nice to figure out what’s up with that statistical discrepancy, I think the most important thing, by far, is simply to confirm whether Biggs is correct about what happens when these results are separated by natal sex. If he is correct, I would argue that that would require immediate action on the part of the authors of this study to get the word out about what would be, if you buy their logic, an extremely alarming finding. We’ll see how long the process takes and what results PLOS ONE will be willing to provide afterward. (If you’re a researcher who has experience working with this type of data and you want to try to get your hands on it, please let me know.)

If Biggs is correct, the sex-disaggregated results would call into question even the seemingly positive findings on the female-to-male side. Because, as he notes, testosterone has established antidepressant properties (Biggs cites this meta-analysis, though for understandable reasons it includes only studies conducted on natal males), it would be very hard to suss out what’s what here. Even assuming causality flows the way Turban’s team wants it to, did the trans men in this study experience improvement because of the specific nature of gender-affirming medical treatment, or because anyone — particularly someone dealing with preexisting mental health problems — would feel better from regularly taking T?

Summing Things Up

Science Vs viewed Tordoff et al. (2022) as a mere replication of something we already know: that puberty blockers and cross-sex hormones are linked to improved mental health in trans kids. This, the show argued, is not controversial. And, according to host Wendy Zukerman, “[T]he reason that it’s not controversial is because — again — we need to look at what happens if you do nothing. Like you don’t allow your kid to go on hormones.” Bad things happen to their mental health — potentially dangerous things.

To support this view, Zukerman and her colleagues cited seven studies in total. For reasons ranging from complete irrelevance to the question at hand to broken comparison groups to genuinely unimpressive results, I’d argue that there is not a single study in the bunch that strongly supports Zukerman’s claim that a failure to put trans kids and youth on blockers or hormones causes harm, and that none of them strongly supports the related claim that blockers and/or hormones cause significantly improved mental health in trans kids and youth.