That New Report On Incels Is A Cherry-picked, Misleading Mess

Incels are creepy, frequently offensive, and occasionally violent, but this is exceptionally poor and politicized research

How scared should you be of “incels”?

Very, if a new report (PDF) from the Center for Countering Digital Hate (CCDH) is any indication. This is a very important report that we should all pay attention to, according to the alarmed media coverage it garnered in outlets like The Washington Post, The Hill, and Insider.

Incels actually came up a few Singal-Mindeds ago, in the context of my complaining about poor and credulous journalistic coverage of creepy and/or disfavored and/or trollish online communities like Kiwi Farms:

Same deal with coverage of “incels” — involuntary celibates, or men frustrated about their inability to find women who will have sex with them. A very small percentage of incels, most famously Elliot Rodger, engage in acts of violence or harassment. But mostly they are this sprawling sad-sack community of disaffected young men with a diversity of views on various subjects, including women. There are creepy men’s rights activist incels, but there are also more straightforwardly tragic ones, hobbled by physical or mental disabilities, who blame themselves rather than women for their shortcomings.

The coverage of this community was so bad! “Incel” came to be synonymous with “terrorist,” to the point where Ellen Pao, the former CEO of Reddit, inadvertently posted one of the best tweets of 2018, suggesting, to the extent I can understand it, that tech companies begin making lists of — and monitoring — their virgin staffers.

There have been only sporadic attempts on the part of journalists (this Reply All episode and the podcast Incel by Naama Kates, which I haven’t listened to as much of as I’d like, are two examples) to treat the incel phenomenon as anything but another thing to get morally outraged about.

Thank you for the excellent timing, Center for Countering Digital Hate. The report is titled “The Incelosphere: Exposing pathways into incel communities and the harms they pose to women and children.” Very scary stuff from the CCDH’s “Quant Lab.”

The authors did take a highly quantitative approach. They didn’t really dig deeply into the online forums in question, let alone attempt to interview those who post to them. Rather, they scraped data from more than a million posts to these forums so they could evaluate how common certain words and phrases and themes were.

Look: A lot of incels are creepy. The creepiest and most disturbed incels do, very rarely, commit horrific acts of violence. It’s not a nonexistent threat. But there’s a lot of danger in the world, and people aren’t always good at appropriately evaluating and triaging risks, so I think there’s always a danger that some shiny new threat will attract more attention than it should. There’s some dispute over how many real-life killings and assaults can be fairly attributed to incels per se — we’ll get back to that. But right off the bat it’s worth noting that even a liberal estimate of this number — 100 — would be dwarfed, by almost an order of magnitude, by the number of murders in Chicago in 2021 alone, and is far lower than the number of Americans who succumb to Covid every day.

That said, there’s nothing inherently wrong with studying online venues where extremism flourishes, or at least isn’t stigmatized. It’s important, because some people do get radicalized in these forums, and/or some people who post there are already radicalized and/or are on the verge of committing a violent act. Some incel forums certainly qualify for this sort of study. But the particular analysis offered by the CCDH Quant Lab is so fuzzy, and bears so many marks of exaggeration, that it’s hard to know what to make of it.

I’m not going to give it a full fisking, but rather highlight some of the reasons I think we should be very skeptical. Then, at the end of the post — stay tuned! — I’ll bring in the thoughts of a journalist who knows a lot about incels and about the main forum featured here, Incels.is (they anonymize it, but you can just Google some of the quotes they include and see that’s what it is).

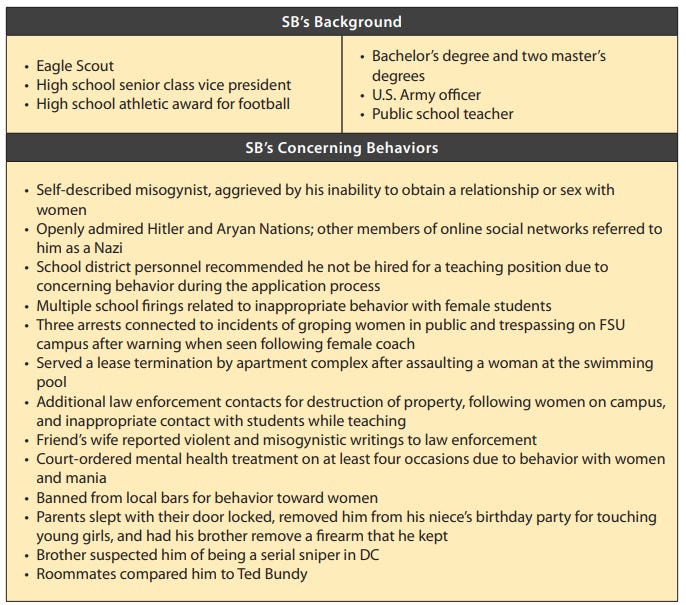

There’s a large warning sign very early on in this report. The authors note that “The Secret Service National Threat Assessment Center (NTAC) said in March 2022 that incels are a rising threat in the US.” Wow — incels are such a big problem that the Secret Service is singling them out? Scary, if true. But it isn’t true. The citation points to an NTAC case study about a horrible incident: “On November 2, 2018, a 40-year-old gunman opened fire inside Hot Yoga Tallahassee, a yoga studio in Tallahassee, FL, killing two women and injuring four more before committing suicide.”

The perpetrator was a deeply disturbed, violent, possibly pedophilic individual with signs of ultraright politics. This situation was the exact opposite of the befuddled neighbor saying “But he was such a nice boy!”:

While the report mentions incels, it does so in a broader context, in effect saying that this is one subgroup of a broader misogynistic culture worth keeping an eye on: “The Hot Yoga Tallahassee attacker did not appear to adopt any of these specific ideological labels, but his behavior and beliefs aligned with many who do. Although these labels and their origins vary, they all have proponents who have called for violence against women.”

So it’s just inaccurate to describe this NTAC case study as a report that singled out incels as a rising threat. And if the CCDH researchers can’t summarize a brief report accurately, what else are they getting wrong or intentionally obscuring?

Along those same lines, early on the researchers explain that while incels started as a more innocent online support group, they “have since become predominantly male-only spaces that blame their members’ problems on women, promoting a hateful and violent ideology linked to the murder or injury of 100 people in last ten years, mostly women.” That number jumped out at me — I hadn’t seen anyone suggest such a high estimate. The citation is to a page in a book — 2021’s Men Who Hate Women: From Incels to Pickup Artists: The Truth about Extreme Misogyny and How it Affects Us All, by Laura Bates. I was able to grab an EPUB, and early on there is indeed the following passage:

The incel community is the most violent corner of the so-called manosphere. It is a community devoted to violent hatred of women. A community that actively recruits members who might have very real problems and vulnerabilities, and tells them that women are the cause of all their woes. A community in whose name over 100 people, mostly women, have been murdered or injured in the past ten years. And it’s a community you have probably never even heard of.

There are citations in the book, but none connected to this claim. It’s unclear where she got it.

This is sorta interesting: The CCDH report has “Quant Lab” on the front page. It talks about “data scraping.” The goal is to give the impression of dispassionate data analysis — just the facts, ma’am. But an early, key claim designed to focus the reader’s attention, to say This is a serious problem, is supported by a citation to a book that in turn doesn’t offer any citation to support it. Just seems sloppy.

The report also suffers from this weird cherry-picking that made me suspicious before I got too deep into the report. “Forum members post about rape every 29 minutes, and examination of discussions of rape shows that 89% of posters are supportive.” Again, that doesn’t sound good.

But I noticed that the researchers flipped between different ways of describing the prevalence of certain terms and themes:

• Over a fifth of posts in the forum feature misogynist, racist, antisemitic or anti-LGBTQ+ language, with 16% of posts featuring misogynist slurs.

• Forum members post about rape every 29 minutes, and examination of discussions of rape shows that 89% of posters are supportive.

Wait, so sometimes it’s reported as a percentage of posts, and sometimes as a frequency… why? The answer, which you won’t get until you read much deeper, is that only 1.6% of posts mention rape:

And of course this is super zoomed-out. While this forum does contain horrible content about how women should be raped — don’t worry, I promise I’ll link to it shortly, lucky you — the actual percentage of posts containing this sort of threat, rather than a mention of “rape” or its variants in other contexts, is likely significantly lower than 1.6%.
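To make the framing issue concrete, here is a rough back-of-the-envelope sketch in Python. It assumes, and this is an assumption rather than something the report states, that the 1.6% share applies to the same 1,183,812-post total cited elsewhere in this piece. The variable and function names are made up for illustration.

```python
# Back-of-the-envelope: a rate ("every 29 minutes") and a share ("1.6% of posts")
# can describe the same underlying count of posts.
# ASSUMPTION: the 1.6% share applies to the full 1,183,812-post corpus.

TOTAL_POSTS = 1_183_812       # post count cited elsewhere in this piece
RAPE_MENTION_SHARE = 0.016    # "only 1.6% of posts mention rape"
MINUTES_BETWEEN_POSTS = 29    # "post about rape every 29 minutes"


def implied_mentions(total_posts: int, share: float) -> float:
    """Number of posts implied by a percentage share of the corpus."""
    return total_posts * share


def implied_window_days(mentions: float, minutes_between: float) -> float:
    """Length of time over which that many posts would arrive at the stated rate."""
    return mentions * minutes_between / (60 * 24)


mentions = implied_mentions(TOTAL_POSTS, RAPE_MENTION_SHARE)
window_days = implied_window_days(mentions, MINUTES_BETWEEN_POSTS)

print(f"Implied posts mentioning rape: {mentions:,.0f}")
print(f"Time window implied by the rate: {window_days:,.0f} days")
print(f"Share of posts with no mention: {1 - RAPE_MENTION_SHARE:.1%}")
```

Under these assumptions, the "every 29 minutes" rate and the 1.6% share both describe roughly 19,000 posts. The rate sounds alarming and the share sounds small, yet they are the same quantity. If the report's actual collection window or denominator differs, the numbers here would shift accordingly.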

On the other hand, “examination of discussions of rape shows that 89% of posters are supportive.” That sure sounds bad. So they looked at all the rape mentions and saw that overwhelmingly, the respondents to posts about rape were pro-rape? No. The researchers cherrypicked two specific threads on the forums. “Analysis of the comments on two of these threads revealed that 89% of those who had a stance on the issue were in support of the original poster, with 5% against.” How did they choose those two threads? They don’t say. Did they find similar patterns in other threads? They don’t say. Again, it’s this thing where there’s a scary headline finding — that 89% figure — but then you get deeper into the muck of their methodology and realize that there are unanswered questions. (Here’s one of the two threads in question — it’s awful, but if you’ve been around the internet block, you will recognize a lot of the content as desperate, attention-grasping LARPing. Any disillusioned, lonely young asshole can type a few words about how cool rape is and bask in the affirmation they get from other disillusioned, lonely young assholes.)

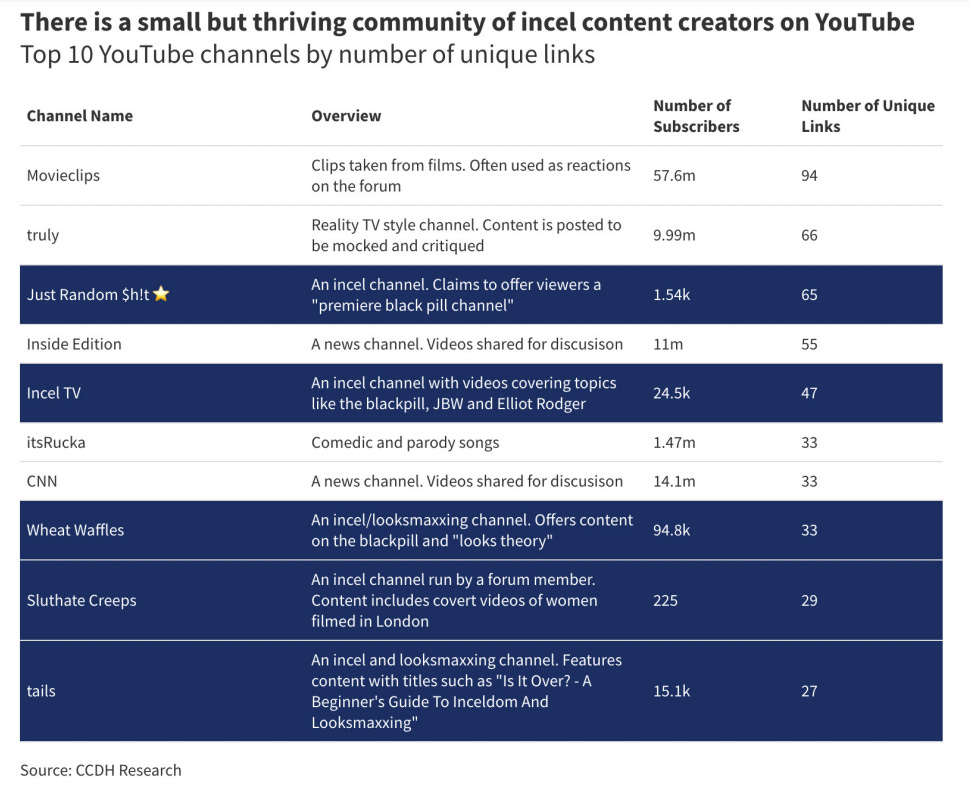

Other claims in the report sound menacing but are virtually contentless. For example, one subheadline reads “The Incel Forum has links to mainstream social media platforms.” Then there’s a graph showing that of the more than a million posts, a small fraction of them link to YouTube or Reddit.

O… kay? YouTube and Reddit are two very popular social media sites, so of course people are going to link to them. In fact, you could take the sentence “The Incel Forum has links to mainstream social media platforms,” swap out “The Incel Forum” for probably 99.99% of the forums that exist on the internet, and the sentence would be true.

What sorts of YouTube videos are Incels.is users linking to? Here you go:

That looks like a tiny number of total links given the sheer volume of posts. By my count, 482 links to YouTube videos in 1,183,812 posts.
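For a sense of scale, here is a minimal Python sketch that turns those counts into shares. It uses only figures given in this piece: 482 YouTube links, 1,183,812 posts, and the 29 unique links to the one channel the report singles out. It assumes each link sits in its own post, so the results are upper bounds on the share of posts; the helper name is hypothetical.

```python
# Share of forum posts that link to YouTube, using the counts given in the text.
# ASSUMPTION: each link appears in a separate post, so these are upper bounds
# on the share of posts containing a link.

TOTAL_POSTS = 1_183_812
YOUTUBE_LINKS = 482
SINGLE_CHANNEL_LINKS = 29  # unique links to the channel the report singles out


def describe(label: str, links: int, total: int) -> str:
    """Express a link count as a percentage and as a one-in-N figure."""
    return f"{label}: {links / total:.4%} of posts, about 1 in {total / links:,.0f}"


print(describe("All YouTube links", YOUTUBE_LINKS, TOTAL_POSTS))
print(describe("Links to one channel", SINGLE_CHANNEL_LINKS, TOTAL_POSTS))
```

Run as written, this works out to about 0.04% of posts (roughly 1 in 2,456) for all YouTube links, and roughly 1 in 40,821 for the single channel.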

What are we to make of this? A channel like Just Random $h!t ⭐, with a grand total of fewer than two thousand subscribers (tiny by YouTube standards), is going to get far more attention from being featured in this report than it otherwise would have. And if you go there, you’ll see it’s extremely random, low-quality, oftentimes clearly stolen content (the most viewed video, by far, consists of stolen clips from what appears to be an OnlyFans star).

Then there’s the YouTube channel Sluthate Creeps, which the researchers describe as a major cause for alarm. “SlutHate Creep [sic — they cannot even get the name right], one of the popular channels on the forum we studied, is designed to denigrate covertly filmed women,” notes the CCDH. “It should never have lasted so long.” Later in the report, several breathless paragraphs are devoted to it, and specifically to the claim of random people being unwittingly filmed. The Washington Post’s coverage of this report echoes the language that this is a “popular” YouTube channel.

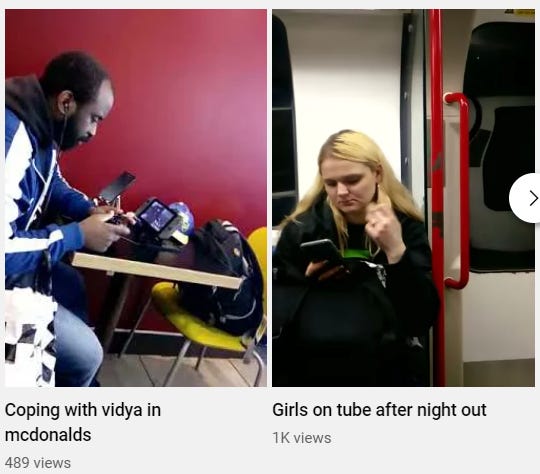

This channel has 225 subscribers! It barely exists. I guess it’s “popular” in the sense of being in the top 10 most-linked-to YouTube channels on Incels.is, but there were 29 unique links to it out of more than a million posts! It isn’t popular in any reasonable sense of the word. And while the above chart claims an Incels.is forum member runs it (no evidence is provided to support this claim), it honestly comes across like an automated channel run by an algorithm — it is exceptionally random. There’s one video of girls on a train in the Shorts feed, yes, but that section has a bunch of short clips of random people out in public, with no discernible theme: To the left of the girls-on-train video in the Shorts feed is… “Coping with vidya in mcdonalds,” which is just a short video of a guy playing video games at McDonald’s (489 views).

To the right of the girls-on-train video is… “Bum rap,” a brief interaction with a possibly homeless guy. Also on the channel: a five-second clip of Borat telling a prim dinner party “I like shaved pussy” and some sort of comedy sketch about “Russian online tramp racing.”

There’s definitely some incel stuff on Sluthate Creeps, albeit generally draped in layers of drama and controversy I couldn’t penetrate. So I suppose this is technically an incel channel, even if the incel stuff is sprinkled with a bunch of other nonsense. But the claim that the channel is designed “to denigrate covertly filmed women” is just false, because there’s genuinely no pattern to the random videos of people being filmed in public.

If incels are such a grave threat, and if, as this report suggests, there’s an important link between Incels.is and YouTube, why all the freaking out about a minuscule, random channel that isn’t even solely dedicated to incel-related subjects? Sluthate Creeps has been around since 2015 and has generated just over 100,000 total views. That’s far less, in its entire existence, than a single moderately successful YouTube video gets. What is the point of this? What are we supposed to be worried about?

Let me put this all together:

The researchers examine more than a million posts on a major incel forum.

They note that this forum “has links to mainstream social media platforms,” most of all YouTube.

They find barely any links to incel content on YouTube, so they dress up what they do find to be as menacing as possible, in one case describing a YouTube channel in a very selective manner, obscuring from readers its randomness, and the fact that it’s posting short videos of various random people, not just women and/or interracial couples.

Quant Lab!

***

While this report unsurprisingly calls for a lot of deplatforming and deranking on the part of YouTube and Google — interventions I am generally skeptical of, for multiple reasons — there are, to be fair, reasonable tidbits as well. For example:

Moonshot, an organization that provides solutions on digital violent extremism, proposed the following interventions in its 2021 report on incels:

• Create an online-offline referral mechanism to offer support services and resources directly to at-risk individuals, offering reassurances on privacy.

• Promote support services, positive messages and events through influential personalities online.

• Initiate one-to-one interventions through direct messaging and relationship building.

• Create alternative spaces for at-risk users to share grievances in a healthy environment.

• Invest significant time and resources in outreach and offer aftercare.

• Incorporate incel awareness into existing programming.

• Create a practitioner network for knowledge sharing and intervention support.

Sure. Seems like decent bang for the buck. There should be moles in these forums who attempt to engage with their members, particularly those who seem closest to suicidal or homicidal precipices.

But overall, this is a really sloppy report. And it reminded me of other, similarly sloppy reports I’ve written about in the past, like this one from the U.N. about online harassment and this ADL one on anti-Semitism and this Amnesty one on harassment against women. In every case, it simply seems like the organizations set out to find that the threat they were researching was, in fact, quite serious — even if the data didn’t support that narrative, or even if there’s no consensus on what constitutes evidence supporting that narrative.

When I found out about the Center for Countering Digital Hate’s report, I’d already been DMing with Naama Kates, the aforementioned host of the podcast Incel, about doing an interview with her for this newsletter (if you have a question for her, leave it in the comments). Kates knows a lot about incels and has interviewed many of them.

I asked for her thoughts on this report and she sent me an appropriately salty email:

So I've written about this a bunch for UnHerd and I'll link you a couple of those here (they're each like 500 words so v short) because I've written about that very organization and their ridiculous studies before. This is what they do.

https://unherd.com/thepost/another-set-of-panicked-headlines-about-incels/

https://unherd.com/thepost/no-children-arent-being-ensnared-by-the-far-right/

https://unherd.com/thepost/vice-news-has-an-incel-problem/

These studies that are done by "scraping forums" and calculating "toxicity scores" or what not, imply that the use of certain kinds of language on internet forums is somehow predictive or indicative of real-world violence, or some kind of deep "hate." This is a faulty premise, there is no established correlation between "toxic language" and actual behaviors or beliefs, let alone radicalization; the metric was invented by these people themselves. "Toxicity score." Honestly. Furthermore, these figures can be calibrated and interpreted in nearly infinite ways (as I discussed in the "panicked headlines" piece); the researchers can choose what other websites to compare, what intervals of time to present, what combinations of words to determine indicate "hate." Finally, they are completely missing the context and culture of the "speech" they are analyzing. They decide that terms like "curry" and "foid" are "slurs," and then write about how there are 5 slurs per minute or whatever. It's nonsense. This community, this subculture uses those descriptively, humorously, they don't intend or find offense by them. An Indian kid referring to himself as a "currycel" is not a sign of hatred just because the researchers don't like it. [bolding mine]

These ridiculous "forum scrape," "linguistic analysis," "word cloud," "machine learning algorithm" studies of a goddamn website were done ad nauseam in 2018, 2019, by students etc. It was the only existing literature on the topic when I started this. They are useless. To date, a few studies have been done of the actual communities, with surveys, using things like the radical beliefs scale developed by Sophia Moskalenko. They have turned up no link between use of the forum or the black pill and radicalization -- though they have found a link between those things and misogyny, and other factors. These are studies by Sophia Moskalenko, William Costello, Ken Reidy, Mia Bloom. I can link if you want.

It's like the real studies turned up nothing so they decide to go back to the forum scraping so they could tweak it to give them their predetermined, desired outcome, and justify the expense of their "new quant lab." What a joke. They also named the site owners, for no apparent reason, whose identities were doxxed by a disgusting NYT piece that I also wrote about (https://unherd.com/thepost/the-nyts-latest-hit-job-backfires/), when, isn't this supposed to be about "radicalization" and "extremism"? It feels so petty and personal. They also misrepresented the new, very strict rules about [child abuse sexual material] as though they were somehow allowing CASM? They have never allowed it, and forward it to the police if it somehow ends up in the DMs.

I know exactly which sites they're talking about, and other than incels.is, the others in question are not actually incel forums and are the same 5 people over and over for years. If they actually LOOKED at the content they were "analyzing painstakingly for 18 months" instead of just feeding it to some shiny useless algorithm, they'd know that.

I am not knowledgeable enough to vouch for all of this, but it’s quite interesting, and I thought the bolded part was absolutely vital — a better-expressed version of something I noticed as I read the report. This is a version of the problem with “researcher degrees of freedom,” which has come up before in this newsletter. Basically, if you are implicitly or explicitly committed to reaching a conclusion before you start analyzing data, and you have a lot of data to analyze, and you have no guardrails about how you’ll analyze that data or what even constitutes evidence for or against your hypotheses, you’ll always be able to find what you’re looking for, even if it isn’t really there.
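A stylized way to see the "researcher degrees of freedom" problem is a small Python simulation. This is a sketch under simplifying assumptions, not a model of what the CCDH did. It imagines a dataset with no real effect in it, an analyst who can slice it many independent ways (different word lists, time windows, comparison sites), and a conventional 5% threshold for calling any one slice a "finding." The function name and parameters are made up for illustration.

```python
import random

ALPHA = 0.05  # conventional threshold for calling a result "significant"


def chance_of_false_alarm(num_analyses: int, trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of simulated studies in which at least one analysis looks
    'significant' even though nothing real is going on.

    ASSUMPTION: the analyses are independent, and under the null hypothesis
    each one's p-value is uniformly distributed between 0 and 1.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        p_values = [rng.random() for _ in range(num_analyses)]
        if min(p_values) < ALPHA:  # the analyst reports the scariest-looking slice
            hits += 1
    return hits / trials


for k in (1, 5, 20):
    simulated = chance_of_false_alarm(k)
    exact = 1 - (1 - ALPHA) ** k  # closed-form answer for independent analyses
    print(f"{k:>2} analyses: simulated {simulated:.2f}, exact {exact:.2f}")
```

The closed-form values are about 5% for one analysis, 23% for five, and 64% for twenty. With enough unconstrained ways to cut the data, a headline-worthy result becomes more likely than not even when there is nothing there. Real analytic choices are rarely independent, so the exact numbers are illustrative only.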

All these risks run higher when the research is conducted by an activist organization. In theory, peer review offers some quality control (although, on the other hand, LOL), but the Center for Countering Digital Hate can do whatever research it wants, with as little QC as it wants, publish it, and then pitch it to journalists. There’s no independent evaluation, not even of a pro forma variety.

Journalists should be more skeptical of this stuff, is what it all comes down to. As it so often does.

Questions? Comments? Your favorite posts of mine (jsingal69) from Incels.is? I’m at singalminded@gmail.com or on Twitter at @jessesingal. The image is of the original Forever Alone guy, via Know Your Meme.

The WaPost piece that regurgitated the report’s conclusions was written by Taylor Lorenz. Who could have guessed?

This reminds me of this report called "Exploring Extreme Language in Gaming Communities" which counted "border" as a racist word: https://gnet-research.org/2022/01/20/exploring-extreme-language-in-gaming-communities/