Let's Look Closely At Some Of Marty Seligman's Claims About The Penn Resilience Program

There is a lot less there than he thinks

This is an addendum to an article I wrote for The Chronicle of Higher Education. In that one, I responded to an article Marty Seligman wrote in response to another article I wrote that was adapted from my book.

Got all that? Great.

My editor asked me to move this part to my newsletter because things had already gotten fairly long and in the weeds. I’m fine with that, though I do think what follows is a telling example of how those who sell psychological interventions can subtly distort the research supposedly underpinning them.

Here’s the excerpt in question (numbers added by me):

Singal concentrates his attack on the fact that some of the evidence that the Army evaluated in adopting the Penn Resilience Program came from children and adolescents in school. This was too remote from adult soldiers in combat, Singal claims, even though the targets of PRP are preventing anxiety and depression.

Singal tells the reader about one early meta-analysis that concluded that no evidence was found that these programs reduce anxiety and depression, and another that has both positive effectiveness evidence and no effectiveness evidence, depending on the variable analyzed. So he indicts me for a “tendency to overclaim.” But he fails to tell the reader about the three more complete and more recent meta-analyses that clearly show that these programs work.

The most recent and most comprehensive (1) meta-analysis, published in 2020 in the Journal of Affective Disorders, finds these programs to be effective. The authors reviewed 38 controlled studies, including 24,135 individuals. At postintervention, the mean effect size was significant, and subgroup analyses revealed significant effect sizes for programs administered to both universal and targeted samples, programs both with and without homework, and programs led by teachers. The mean effect size was maintained at six months’ follow-up, and subgroup analyses indicated significant effect sizes for programs administered to targeted samples, programs based on the Penn Resiliency Program, programs with homework, and programs led by professional interventionists.

Similarly, a 2015 (2) meta-analysis in The Journal of Primary Prevention examined 30 peer-reviewed, randomized or cluster-randomized trials of universal interventions for anxiety and depressive symptoms in school-age children. There were small but significant effects regarding anxiety and depressive symptoms as measured at immediate posttest. At follow-up, which ranged from three to 48 months, effects were significantly larger than zero regarding depressive (but not anxiety) symptoms.

A (3) 2017 meta-analysis in the Journal of the American Academy of Child and Adolescent Psychiatry reviewed 49 studies. For all trials, resilience-focused interventions were effective relative to a control in reducing four of seven outcomes: depressive symptoms, internalizing problems, externalizing problems, and general psychological distress. For child trials (meta-analyses for six outcomes), interventions were effective for anxiety symptoms and general psychological distress. For adolescent trials (meta-analyses for five outcomes), interventions were effective for internalizing problems.

Seligman is, again, being a little bit slippery — when he references studies which “clearly show that these programs work,” he is referring to a bucket of studies covering not only PRP, but also other programs geared at reducing anxiety and depression in young people. To know how strongly these meta-analyses support Seligman’s claim that I am understating the research, we need to dig into them and see how much they have to do with PRP in the first place, because, again, my claim here is just about the Penn Resilience Program — the program CSF was largely built from.

And when you look at these three meta-analyses, they simply don’t offer particularly impressive evidence for PRP. In fact, they’re right in line with what I’ve been saying all along, and what the program’s creator herself found in the meta-analysis she coauthored: Yes, there’s some evidence PRP leads to statistically significant improvements in mental-health outcomes, but due to the small effect sizes it’s questionable whether these are clinically significant, and there’s no good reason to expect a PRP-style program to achieve even this level of effectiveness in a setting as different as the U.S. Army (much older, not to mention overwhelmingly male, though I don’t think I mention the sex issue in my book), let alone to accomplish the taller task of preventing PTSD and suicide. I can’t put it better than Nick Brown did in his article in The Winnower, in a quote I included in my original piece: “The idea that techniques that have demonstrated, at best, marginal effects in reducing depressive symptoms in school-age children could also prevent the onset of a condition that is associated with some of the most extreme situations with which humans can be confronted is a remarkable one that does not seem to be backed up by empirical evidence.”

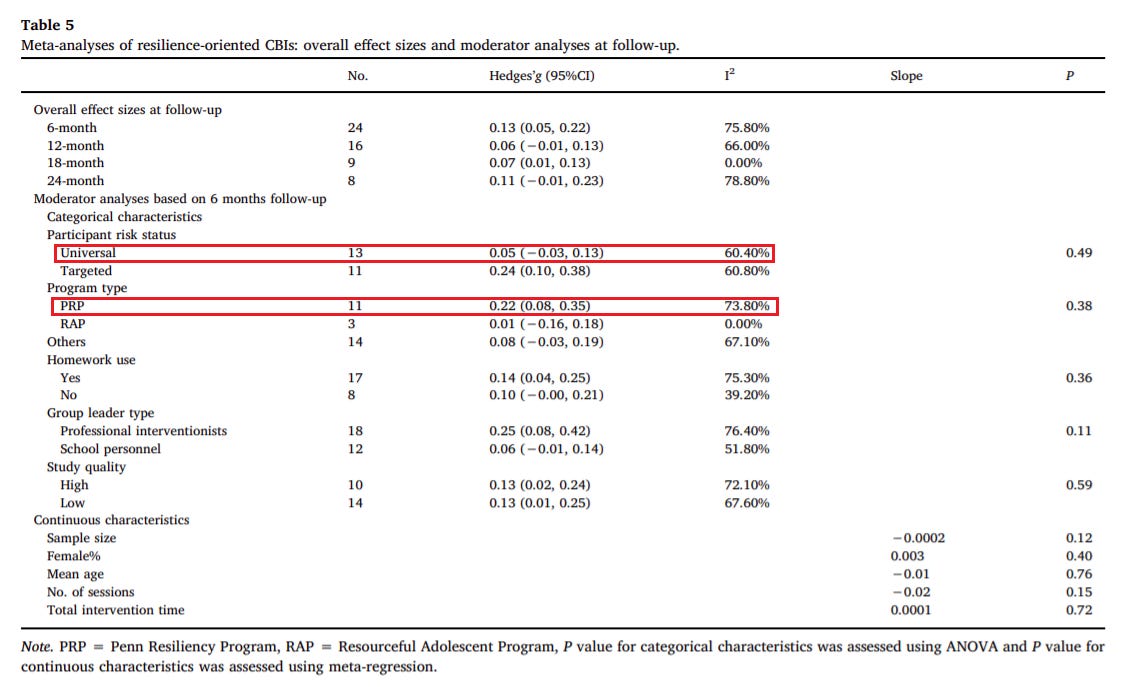

But let’s look at the meta-analyses themselves. Table 5 of meta-analysis (1) reveals that of the included studies, 11 were of PRP and presented longer-term (rather than just immediate post-intervention) data. The average effect size was a Hedges’ g of 0.22. This is considered small. Not “nothing” or “non-existent,” but small. One problem is that there is also significant heterogeneity (I² of 73.80%, for the nerds), meaning the individual studies’ results differ from one another far more than chance alone would predict. And if you go back to Table 2 to get more information about the studies, you’ll see that several of the PRP studies with the more impressive effect sizes at follow-up, like Cardemil et al., 2002 (0.78), Wijnhoven et al., 2015 (0.36), and Yu and Seligman, 2002 (0.45), were conducted on targeted, not universal, populations. This is a crucial distinction, because the entire premise of CSF is that it can be given to everyone. It’s reasonable to expect that you might get larger effect sizes with targeted programs because of regression to the mean and other fairly predictable statistical effects, but the point is that even 0.22, already “small,” might be an overestimate of PRP’s true effect size in universal contexts. Sure enough, the Hedges’ g of all universal studies in the meta-analysis (including both PRP and others) at follow-up was just 0.05, veering toward “tiny.” All of which suggests that even the “small” effect observed here might be overstating the evidentiary base for PRP in CSF-style universal settings, though to know for sure we’d have to average the effects of all the universal PRP interventions evaluated in this study, which I’m not going to do at the moment because we are already so deep in the weeds we cannot tell if it is day or night.

Meta-analysis (2), meanwhile, looked at just three PRP programs out of a total of 30 programs evaluated. Seligman argues that “At follow-up, which ranged from three to 48 months, effects were significantly larger than zero regarding depressive (but not anxiety) symptoms.” This isn’t true for the PRP programs — Table 2 shows no statistically significant effects on depression symptoms at post-intervention or follow-up.

This could be a sample-size issue, but still: This is a strange meta-analysis to present as evidence that PRP works.

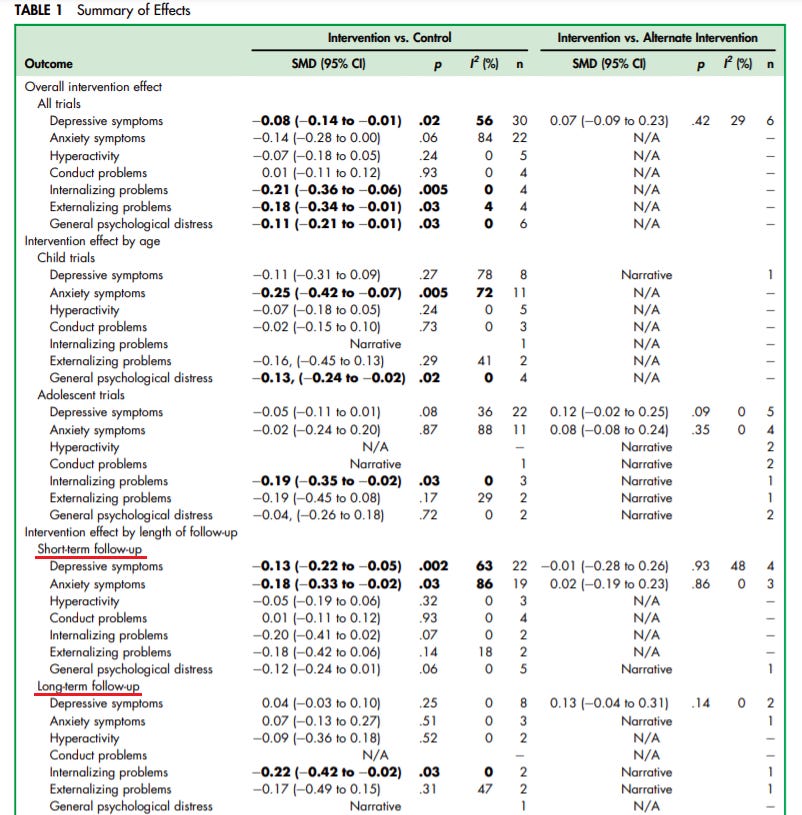

As for meta-analysis (3), it doesn’t break things down in a way that makes it easy to average out the effects of PRP-based programs versus others, but as Table 1 shows, among all the programs the authors evaluated, there were no statistically significant effects on depression or anxiety in the long term. (Short-term was a mixed bag with modest effect sizes.)

Part of what’s going on here, with regard to both the claims I’m responding to in this post and those in the article from which it sprang, is that Seligman is taking a throw-it-all-at-the-wall-and-see-what-sticks approach to the evidence question. It seems that everything is considered to count as evidence for Comprehensive Soldier Fitness or the Penn Resilience Program, even studies not evaluating them directly, or studies that find marginal or nonsignificant results. This study finds a bit of a hint of something on anxiety; this one on depression; this other, non-peer-reviewed one on addiction. All of them count as evidence for CSF and/or PRP; none of them, despite the frequency of small or null results, count against either program. It’s a fairly well-known fact of statistics, especially in the replication-crisis era, that if you test enough things, and slice your data up over and over like you’re at a deli counter, you’re going to get some positive hits, and Seligman just jumps between the hits, presenting them all as evidence for his thesis in a way that ignores a whole lot of null and underwhelming findings.
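The deli-counter problem is easy to put numbers on. The sketch below assumes the simplest possible case: every test is independent, there is no real effect anywhere, and the significance threshold is the usual 0.05. Real outcomes and subgroups within a study are correlated, so treat this as an illustration rather than a model of any particular paper. The function name is my own.

```python
def familywise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests
    when no real effect exists in any of them."""
    return 1.0 - (1.0 - alpha) ** num_tests


for num_tests in (1, 5, 10, 20):
    rate = familywise_error_rate(num_tests)
    print(f"{num_tests:>2} tests: {rate:.0%} chance of at least one 'hit'")
```

Under those assumptions, ten looks at the data give you roughly a 40 percent chance of at least one “significant” result by luck alone, and twenty looks push that past 60 percent.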

Seligman’s Positive Psychology Center has been selling the PRP and its variants not just to schools but to other institutions for years now, and these interventions surely help the PPC to be “financially self-sustaining and [to] contribute[] substantial overhead to Penn,” to borrow from language that has shown up repeatedly in the Center’s annual report. But the evidence for the PRP is highly questionable. And, to repeat a crucial point, when you drag these interventions out of the classroom and into adult settings, you have an even shakier evidentiary base to work with — especially if your goal is to reduce PTSD and suicide in an extremely at-risk population. None of what I am saying is scientifically controversial, and Seligman didn’t really respond to my actual argument, likely because he doesn’t have the evidence he would need to do so.